Without getting into the topic of outright fraud, we know of many common problems that afflict a sizable percentage of scientific papers. One is that it has become quite common for scientists to give their papers titles announcing results or causal claims that are not actually justified by any data in the papers. A scientific study found that 48% of scientific papers use "spin" in their abstracts. Another problem is that scientists may change their hypothesis after starting to gather data, a methodological sin called HARKing, which stands for Hypothesizing After Results are Known. An additional problem is that, given a body of data that can be analyzed in very many ways, scientists may simply try different methods of data analysis until one produces the result they are looking for. Still another problem is that scientists may use various techniques to adjust the data they collect, such as stopping data collection once they have found the statistical result they are looking for, or arbitrarily excluding data points that create problems for whatever claim they are trying to show. Then there is the fact that scientific papers are very often a mixture of observation and speculation, without the authors making clear which part is speculation. Then there is the fact that through heavy use of jargon, scientists can make the most groundless and fanciful speculation sound as if it were strongly rooted in fact, when it is no such thing. Then there is the fact that scientific research is often statistically underpowered, very often involving sample sizes too small to justify any confidence in the results.

All of these are lesser sins. But what about the far more egregious sin of outright researcher misconduct or fraud? The scientists Bik, Casadevall and Fang attempted to find evidence of such misconduct by looking for problematic images in biology papers. We can imagine various ways in which a scientific paper might have a problematic image or graph indicating researcher misconduct:

(1) A photo in a particular paper might be duplicated in a way that should not occur. For example, if a paper is showing two different cells or cell groups in two different photos, those two photos should not look absolutely identical, with exactly the same pixels. Similarly, brain scans of two different subjects should not look absolutely identical, nor should photos of two different research animals.

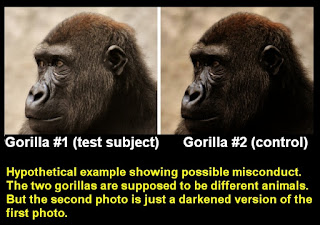

(2) A photo in a particular paper that should be different from some other photo in that paper might be simply the first photo with one or more minor differences (comparable to submitting a photo of your sister, adjusted to have gray hair, and labeled as a photo of your mother).

(3) A photo in a particular paper that should be original to that paper might be simply a duplicate of some photo that appeared in some previous paper by some other author, or a duplicate with minor changes.

(4) A photo in a particular paper might show evidence of being Photoshopped. For example, there might be 10 areas of the photo that are exact copies of each other, with all the pixels being exactly the same.

(5) A graph or diagram in a paper that should be original to that paper might be simply a duplicate of some graph or diagram that appeared in some previous paper by some other author.

(6) A graph might have evidence of artificial manipulation, indicating it did not naturally arise from graphing software. For example, one of the bars on a bar graph might not be all the same color.
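The exact-copy case in (4) is the easiest of these to check by machine. As a rough sketch, assuming the photo has already been loaded as a 2D grid of grayscale pixel values, one can slide a small window over the image and record every position where a window's pixels repeat exactly. The function name and the 4-pixel block size are hypothetical choices for illustration, and this naive version would miss copies that were resized, rotated or recompressed.

```python
from collections import defaultdict


def find_duplicate_blocks(pixels, block=4):
    """Group top-left positions of block x block regions that are pixel-identical.

    Assumes `pixels` is a 2D list of grayscale values (all rows equal length).
    Only exact matches are found; resized or recompressed copies are missed.
    """
    height, width = len(pixels), len(pixels[0])
    positions_by_patch = defaultdict(list)
    for r in range(height - block + 1):
        for c in range(width - block + 1):
            patch = tuple(tuple(pixels[r + i][c:c + block]) for i in range(block))
            # Skip flat patches (e.g. blank background): identical empty
            # regions occur naturally and say nothing about copying.
            if len({v for row in patch for v in row}) == 1:
                continue
            positions_by_patch[patch].append((r, c))
    # A patch seen at two or more positions is a candidate copied region.
    return [pos for pos in positions_by_patch.values() if len(pos) > 1]


if __name__ == "__main__":
    # Toy 8x8 image in which every pixel value is distinct...
    img = [[(r * 8 + c) * 3 for c in range(8)] for r in range(8)]
    # ...then "paste" the top-left 4x4 region into the bottom-right corner.
    for i in range(4):
        for j in range(4):
            img[4 + i][4 + j] = img[i][j]
    print(find_duplicate_blocks(img))  # prints [[(0, 0), (4, 4)]]
```

A match found this way is only a lead for a human to inspect, not proof of misconduct.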

There are quite a few other ways in which researcher misconduct could be identified by examining images, graphs or figures. Bik, Casadevall and Fang made an effort to find such problematic figures. In their paper "The Prevalence of Inappropriate Image Duplication in Biomedical Research Publications," they report a large-scale problem. They conclude, "The results demonstrate that problematic images are disturbingly common in the biomedical literature and may be found in approximately 1 out of every 25 published articles containing photographic image data."

But there is a reason for thinking that the real percentage of research papers with problematic images or graphs is far greater than this figure of only 4%. The reason is that the method used by Bik, Casadevall and Fang seems rather inefficient, capable of finding only a fraction of the papers with problematic images or graphs. They describe their method as follows (20,621 papers were checked):

"Figure panels containing line art, such as bar graphs or line graphs, were not included in the study. Images within the same paper were visually inspected for inappropriate duplications, repositioning, or possible manipulation (e.g., duplications of bands within the same blot). All papers were initially

screened by one of the authors (E.M.B.). If a possible problematic

image or set of images was detected, figures were further examined

for evidence of image duplication or manipulation by using the

Adjust Color tool in Preview software on an Apple iMac computer. No additional special imaging software was used. Supplementary figures were not part of the initial search but were examined in papers in which problems were found in images in the

primary manuscript."

This seems like a rather inefficient technique, one that would find less than half of the evidence for researcher misconduct that might be present in photos, diagrams and graphs. For one thing, the technique ignored graphs and diagrams. Probably one of the biggest opportunities for misconduct is researchers creating artificially manipulated graphs that did not naturally arise from graphing software, or simply stealing graphs from other scientific papers. For another thing, the technique would only find cases in which a single paper showed evidence of image shenanigans. It would do nothing to find cases in which one paper inappropriately used an image or graph taken from some other paper by different authors. Also, the technique ignored supplemental figures (unless a problem was found in the main figures). Such supplemental figures are often a significant fraction of the total number of images and graphs in a scientific paper, and are often referenced in the text of a paper as supporting evidence. So they should receive the same scrutiny as the other images or figures in a paper.

I can imagine a far more efficient technique for looking for misconduct related to imagery and graphs. Every photo, every diagram, every figure and every graph in every paper in a very large set of papers on a topic (including supplemental figures) would be put into a database. A computer program with access to that database would then run through all the images, looking for duplicates or near-duplicates in the images, as well as other evidence of researcher misconduct. Such a program might also make use of "reverse image search" capabilities available online. Such a computer program crunching the image data could be combined with manual checks. Such a technique would probably find twice as many problems. Because the technique for detecting problematic images described by Bik, Casadevall and Fang is a rather inefficient technique skipping half or more of its potential targets, we have reason to suspect that they have merely shown us the tip of the iceberg, and that the actual rate of problematic images and graphs (suggesting researcher misconduct) in biology papers is much greater than 4% -- perhaps 8% or 10%.
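The duplicate-finding step of such a program could rest on perceptual hashing, which reduces each image to a short fingerprint that changes little under minor edits. Below is a minimal sketch using a simple "average hash," assuming every image has already been extracted from the papers and converted to a grayscale pixel grid at least 8 pixels on each side. The function names, the 8x8 hash size and the 5-bit distance threshold are illustrative assumptions, not a method used in the studies discussed here.

```python
from itertools import combinations


def average_hash(pixels, size=8):
    """Fingerprint a grayscale image (2D list of ints, at least size x size).

    The image is shrunk to size x size by averaging rectangular cells; each
    cell becomes 1 if brighter than the mean of all cells, else 0. Small
    edits such as a uniform brightness change tend to leave most bits alone.
    """
    height, width = len(pixels), len(pixels[0])
    cells = []
    for i in range(size):
        r0, r1 = i * height // size, (i + 1) * height // size
        for j in range(size):
            c0, c1 = j * width // size, (j + 1) * width // size
            region = [pixels[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            cells.append(sum(region) / len(region))
    mean = sum(cells) / len(cells)
    return tuple(1 if v > mean else 0 for v in cells)


def hamming(a, b):
    """Number of bit positions at which two hashes differ."""
    return sum(x != y for x, y in zip(a, b))


def near_duplicates(images, max_distance=5):
    """Return pairs of image IDs whose hashes differ in at most max_distance bits.

    `images` maps a hypothetical image ID (e.g. "paper1/fig2") to its pixel grid.
    Every pair is compared, which is only practical for modest collections.
    """
    hashes = {name: average_hash(px) for name, px in images.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(hashes), 2)
        if hamming(hashes[a], hashes[b]) <= max_distance
    ]
```

For example, a 16x16 gradient and a copy of it brightened by 10 gray levels produce identical hashes, while an inverted version differs in all 64 bits. Comparing every pair grows quadratically with the number of images, so a version covering a very large set of papers would need some kind of index over the hashes, and any flagged pair would still go to the manual checks mentioned above.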

A later paper ("Analysis and Correction of Inappropriate Image Duplication: the Molecular and Cellular Biology Experience") by Bik, Casadevall and Fang (along with Davis and Kullas) involved analysis of a different set of papers. The paper concluded that "as many as 35,000 papers in the literature are candidates for retraction due to inappropriate image duplication." They found that 6% of the papers "contained inappropriately duplicated images." They reached this conclusion after examining a set of papers in the journal Molecular and Cellular Biology. To reach it, they used the same rather inefficient method as the previous study I just cited. They state, "Papers were scanned using the same procedure as used in our prior study." We can only wonder how many biology papers would be found to be "candidates for retraction" if a really efficient (partially computerized) method were used to search for image problems: one using an image database and reverse image searching, checking not only photos but also graphs, and also checking the supplemental figures in the papers. Such a technique might easily find that 100,000 or more biology papers were candidates for retraction.

We should not be terribly surprised by such a situation. In modern academia there is relentless pressure on scientists to grind out papers at a high rate. There also seem to be relatively few quality checks on the papers submitted to scientific journals. Peer review serves largely as an ideological filter, preventing the publication of papers that conflict with the cherished dogmas of the majority. There are no spot checks of papers submitted for publication, in which reviewers ask to see the source data, original lab notes or lab photographs produced in experiments. The problematic papers found by the studies mentioned above passed peer review despite glaring duplication errors, indicating that peer reviewers are not making much of an attempt to exclude fraud. Given this misconduct problem and the items mentioned in my first paragraph, and given the frequently careless speech of so many biologists, who so often speak as if unproven or discredited claims were facts, it seems there is a significant credibility problem in academic biology.

Postscript: In an unsparing essay entitled "The Intellectual and Moral Decline in Academic Research," Edward Archer, PhD, states the following:

"My experiences at four research universities and as a National Institutes of Health (NIH) research fellow taught me that the relentless pursuit of taxpayer funding has eliminated curiosity, basic competence, and scientific integrity in many fields. Yet, more importantly, training in 'science' is now tantamount to grant-writing and learning how to obtain funding. Organized skepticism, critical thinking, and methodological rigor, if present at all, are afterthoughts....American universities often produce corrupt, incompetent, or scientifically meaningless research that endangers the public, confounds public policy, and diminishes our nation’s preparedness to meet future challenges....Universities and federal funding agencies lack accountability and often ignore fraud and misconduct. There are numerous examples in which universities refused to hold their faculty accountable until elected officials intervened, and even when found guilty, faculty researchers continued to receive tens of millions of taxpayers’ dollars. Those facts are an open secret: When anonymously surveyed, over 14 percent of researchers report that their colleagues commit fraud and 72 percent report other questionable practices....Retractions, misconduct, and harassment are only part of the decline. Incompetence is another....The widespread inability of publicly funded researchers to generate valid, reproducible findings is a testament to the failure of universities to properly train scientists and instill intellectual and methodologic rigor. That failure means taxpayers are being misled by results that are non-reproducible or demonstrably false."
