Occasionally a scientist will claim to have enhanced intelligence or memory in an animal by manipulating one or more of its genes. In this post I will discuss why none of these claims is convincing.

One such claim was made in 1999, when the science journal Nature published a paper entitled “Genetic Enhancement of Learning and Memory in Mice.” The paper describes tests on mice that were genetically modified to overexpress the NR2B gene, and it shows a few graphs attempting to convince us that the genetically modified mice did better in performance tests. But the results do not look very impressive: we see only a slight difference in performance. In Figure 5 we are told “Each point represents data collected from 8-10 mice per group.” That is far too small a sample size for a reliable result. The rule of thumb in animal experiments is that at least 15 animals should be used per study group to have a decent chance of a reliable result. Figure 5 also tells us that one performance test was based on “contextual freezing.” Judging whether a mouse has frozen at a particular moment requires a subjective judgment, and it would be very easy for experimental bias to creep in. While the experimenters make the vague claim that "all experiments were conducted in blind fashion," we should be very skeptical of such a statement, because the paper gives no details of how any blinding protocol was followed.
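The 15-animal rule of thumb can be checked against the usual normal-approximation formula for the number of subjects per group in a two-group comparison, n ≈ 2(z₁₋α/₂ + z₁₋β)² / d². The Python sketch below is only an illustration under assumed parameters: a two-sided test at the 5% level, 80% power, and an effect size expressed as Cohen's d (the difference in group means divided by the standard deviation). None of these numbers come from the papers discussed here, and the normal approximation slightly understates what a t-test needs.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size_d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate animals needed per group for a two-sided, two-sample
    comparison of means (normal approximation; parameters are assumptions)."""
    std_normal = NormalDist()  # mean 0, standard deviation 1
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = 0.05
    z_beta = std_normal.inv_cdf(power)           # about 0.84 when power = 0.80
    return ceil(2 * (z_alpha + z_beta) ** 2 / effect_size_d ** 2)


if __name__ == "__main__":
    # Large (d = 1.0), medium-large (d = 0.8) and medium (d = 0.5) effects
    for d in (1.0, 0.8, 0.5):
        print(f"d = {d}: about {n_per_group(d)} animals per group")
```

Under these assumptions even a large effect (d = 1.0) calls for about 16 animals per group, a medium effect (d = 0.5) for about 63, and groups of 8 to 10 reach 80% power only for effects of roughly d = 1.25 to 1.4.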

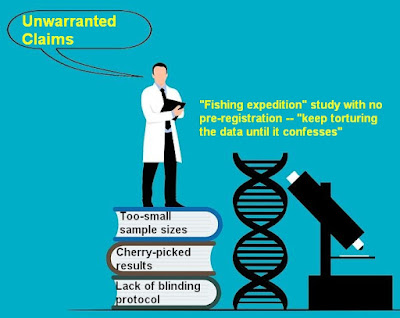

What we have in this research are the same Questionable Research Practices that are so common in modern experimental neuroscience papers. The slight effects shown are just what we would expect to get occasionally, given a large group of neuroscientists playing around with altered genes and testing animal performance, even if none of the altered genes had any effect on mental performance. Similarly, if there are 20 experimenter teams eager to prove the Super-Duper Herbal Pill makes you smarter, and those teams use study groups of fewer than 15 subjects, we would expect one or two of them to report a small success. Such results are plausibly attributable to chance variations in performance scores that have nothing to do with the treatment being tested.
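The arithmetic behind the 20-team example is short. Assuming (for illustration) that each team runs a single test at the conventional 5% significance level and that the pill has no effect at all, the expected number of "successes" is 20 × 0.05 = 1, and the chance that at least one team reports success is 1 − 0.95²⁰. A minimal sketch:

```python
def chance_of_spurious_success(teams: int, alpha: float = 0.05) -> tuple[float, float]:
    """Return (expected number of false positives, probability of at least one)
    when `teams` independent groups each test an ineffective treatment."""
    expected = teams * alpha
    at_least_one = 1 - (1 - alpha) ** teams
    return expected, at_least_one


if __name__ == "__main__":
    expected, at_least_one = chance_of_spurious_success(20)
    print(f"expected false positives: {expected:.1f}")
    print(f"P(at least one): {at_least_one:.2f}")
```

With 20 teams this gives one expected false positive and about a 64% chance of at least one. That figure holds for any group size; what small groups add is low statistical power, so that when a small study does report a success, it is more likely to be one of these chance results than a real effect.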

Another paper involving the NR2B gene is the 2009 paper "Genetic Enhancement of Memory and Long-Term Potentiation but Not CA1 Long-Term Depression in NR2B Transgenic Rats." This paper is another example of Questionable Research Practices, one even worse than the previously discussed example, because its study group sizes are even smaller than those in the 1999 paper. The paper mentions ridiculously small study group sizes such as 3 rats, 4 rats and 5 rats. One graph shows genetically modified rats performing better in the Morris Water Maze test, a test of memory. How many rats were tested to get this result? The paper does not tell us; it merely uses the vague term "rats" (usually a sure sign that a way-too-small study group size was used). Because the paper elsewhere mentions absurdly small study group sizes such as 3, 4 and 5 rats, we may presume that the unspecified number of rats in the Morris Water Maze test was similarly small. A result produced with such tiny study groups is worthless, because you would expect to get such a result by chance (due to random performance variations), given 10 or 20 tests with random rats, even if the genetic modification had no effect at all on performance.
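To see how much two groups of identical animals can differ by chance alone, the sketch below draws both groups from the same normal distribution (mean 0, standard deviation 1, an assumption chosen for illustration) and counts how often the two group means differ by at least half a standard deviation. Nothing distinguishes the groups, so every such difference is pure noise.

```python
import random
from statistics import fmean


def chance_gap_rate(group_size: int, gap: float = 0.5, trials: int = 10_000, seed: int = 1) -> float:
    """Fraction of simulated experiments in which two groups drawn from the
    SAME population differ in mean by at least `gap` standard deviations."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    hits = 0
    for _ in range(trials):
        a = [rng.gauss(0.0, 1.0) for _ in range(group_size)]
        b = [rng.gauss(0.0, 1.0) for _ in range(group_size)]
        if abs(fmean(a) - fmean(b)) >= gap:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    for n in (4, 15, 30):
        print(f"{n} per group: {chance_gap_rate(n):.0%} of null experiments show a gap >= 0.5 SD")
```

Analytically, about 48% of such null experiments show a half-standard-deviation gap with 4 animals per group, about 17% with 15, and about 5% with 30, and the simulation should land close to those values.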

Then there's a 2000 study, "Enhanced learning after genetic overexpression of a brain growth protein." The study has some graphs showing 4 groups of mice, with better performance by one group. But the paper tells us that each of these groups consisted of only 5 mice. This sample size is far too small for a reliable result, and the performance difference is probably just a false alarm, of the kind we would expect from random performance variations, which show up easily with small sample sizes.

A very misleading 2007 press release from the UT Southwestern Medical Center was repeated on the Science Daily site (www.sciencedaily.com), which has proven over the years to be a site where misleading academic PR gets repeated word-for-word without challenge. The story appeared on the Science Daily site with the headline "'Smart' Mice Teach Scientists About Learning Process, Brain Disorders." In the press release, James Bibb makes statements about genetically modified mice that are not warranted by anything in the research paper he co-authored. He absurdly states "everything is more meaningful to these mice." Then he states, "The increase in sensitivity to their surroundings seems to have made them smarter." Later (dropping the "seems") he states, "We made the animals 'smarter.' " Very conveniently, the Science Daily story includes no link to the scientific paper, making it hard for people to find out how groundless such claims are. The story mentions mice modified to have no cdk5 enzyme.

But by looking up James Bibb on Google Scholar, I can find the 2007 paper that the press release is based on, a paper entitled "Cyclin-dependent kinase 5 governs learning and synaptic plasticity via control of NMDAR degradation." The paper discusses tests with mice that had been modified to have no cdk5 enzyme. The paper gives us another example of a poorly designed experiment involving Questionable Research Practices. If we ignore a test that produced no significant difference, we find the study group sizes used to test mouse intelligence and memory were only 10, 11, and 12, far too small to be anything like a robust demonstration of a real effect. Most of the graphs show only a minor difference between the performance of the genetically engineered mice and normal mice. The study was not pre-registered, and did not use any blinding protocol. Using the term "both genotypes" to refer to the normal mice and the genetically engineered mice, the paper confesses, "Both genotypes showed equally successful learning during initial training sessions." No robust evidence has been published that any smarter mice or better-remembering mice were produced.

There is a general reason why slight results like the ones reported in such papers are not convincing evidence for an extraordinary claim such as the claim that an increase in intelligence or memory was genetically engineered. If many experimental tests are done, it would be very easy for some experimenter to get a small-sample, modest-effect result that is purely due to chance rather than to the effect the experimenter claims. For example, imagine I wanted to claim that eating some food increased someone's psychokinetic mind-over-matter powers. I could do 20 dice-throwing experiments, each done after a small number of subjects (such as 5 or 10) ate a different food. Purely by chance I would probably get a random variation in one of them, and then I could say something like, “Aha, I've proved it – asparagus increases your mind-over-matter powers.” I could then ship the asparagus test off to a scientific journal. But this type of limited test is never sufficient to establish such an extraordinary claim. The results would be not much better than you would expect to get by chance, given that number of experiments and such small samples.
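The dice thought experiment can be made concrete. Suppose, purely as an illustrative assumption, that each of the 20 food groups makes 100 throws in total while aiming for a six, and that food has no effect, so the number of sixes follows a binomial distribution with p = 1/6. The sketch below finds the smallest hit count that would pass as "significant" at the one-sided 5% level, then the probability that at least one of the 20 foods reaches it by luck.

```python
from math import comb


def upper_tail(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p), computed exactly."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def lucky_food_probability(foods: int = 20, throws: int = 100, p: float = 1 / 6,
                           alpha: float = 0.05) -> tuple[int, float, float]:
    # Smallest number of sixes whose chance probability is at most alpha
    threshold = next(k for k in range(throws + 1) if upper_tail(k, throws, p) <= alpha)
    tail = upper_tail(threshold, throws, p)
    # Probability that at least one of the independent food groups reaches it
    return threshold, tail, 1 - (1 - tail) ** foods


if __name__ == "__main__":
    threshold, tail, any_food = lucky_food_probability()
    print(f"'significant' result: {threshold}+ sixes out of 100 (chance {tail:.3f})")
    print(f"P(at least one of 20 foods looks 'psychokinetic'): {any_food:.2f}")
```

Because the count of sixes is discrete, the per-food false-alarm chance lands a little under 5%, yet the chance that some food produces a "discovery" is still roughly one in two.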

Similarly, you should never be impressed by hearing that some scientists testing one particular gene got a slight increase in performance in a few tests involving small numbers of subjects. We would not have convincing evidence that some mice actually had their intelligence or memory increased unless scientists had shown something like one of these:

- A large and dramatic performance increase shown in repeated tests by several replicated scientific studies using at least 25 or 35 subjects; or

- A moderate but very substantial increase in performance shown by a large number of scientific studies (such as 10 or 20) each using a fairly good sample size (such as 30 or 50); or

- A moderate but very substantial increase in performance shown by several scientific studies each using a good sample size such as 100 or 200.

No such result has occurred in the literature. The studies claiming cognitive enhancements in genetically altered animals are typically junk science studies using way-too-small study group sizes and having multiple other deficiencies.

In 2014 some scientists claimed that they had made mice smarter by inserting a human gene into them. A popular press account of the scientists' paper had the bogus headline “Mice Given Gene From Human Brains Turn Out To Be Super-Smart.” But that's not what the scientific paper reported. In fact, the scientists reported that “we did not observe enhanced learning” in these genetically engineered mice with a human gene “in the response-based task or the place-based task.” Referring to the genetically enhanced mice and the normal mice as “the two genotypes,” the scientists also reported, “The two genotypes exhibited equivalent procedural/response-based learning as assessed with the accelerating rotarod protocol, the tilted running wheel test, the T-maze protocol in which extramaze cues had been removed, and the procedural/response-based version of the cross-maze task.”

In Figure 1, the paper has two graphs comparing the performance of the genetically enhanced mice in a maze to the performance of mice without the genetic enhancement. In one graph, the genetically enhanced mice did a little better, and in the second graph they did a little worse. This is not at all compelling evidence of increased intelligence.

As so often happens nowadays in neuroscience literature, the authors gave their paper a title that was not warranted by the data collected. The title they gave is "Humanized Foxp2 accelerates learning by enhancing transitions from declarative to procedural performance." That title is inappropriate given the results described above. The paper mentions no blinding protocol, meaning it failed to follow best practices for experimental research. The study group sizes were sometimes as high as 21 but often much smaller. A result such as this could very easily have been produced by chance variations having nothing to do with the difference in genomes.

What we see in some of these studies is a very creative search for superior cognitive performance after the animals are tested, by graphing rarely-graphed performance numbers. There are many ways of testing learning, intelligence and memory in small animals. Eleven different methods are discussed on the page here. Each test offers multiple different things that can be measured. For example, in the Elevated Plus Maze test described here, there are at least six different performance numbers that could be gathered and graphed: the duration and entry frequency numbers graphed here, and the % open arms, % closed arms, number of head dips, and number of passages numbers graphed here. With at least eleven different possible performance tests, each offering five or more numbers that can be graphed, someone testing learning or memory in a rodent may have fifty or more different performance measures to choose from when graphing results in a scientific paper.

So should we be impressed when some paper testing genetically modified rodents shows a few graphs of superior performance by the genetically modified rodents? Not at all. Given random performance variations across a variety of tests, with no effect at all from the genetic modifications, we would expect that merely by chance a few of the numbers for the genetically modified rodents would be better. And when the writers of scientific papers are free to cherry-pick whatever results look most favorable to the hypothesis they are claiming (because they did not pre-register exactly which results they would graph), we would expect these chance results to be the ones that end up graphed in their papers. Similarly, if I were free to cherry-pick any five of 50 possible graphs numerically comparing quality of life in the United States and in Afghanistan, I could probably create the impression that people in Afghanistan have a higher quality of life than people in the United States.
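A rough simulation shows why a handful of favorable graphs proves little. It assumes 50 independent performance measures (an idealization, since real measures are often correlated), 10 animals per group, and a genetic modification with no effect at all. For each measure it computes a z-score for "modified group better," counts how many clear a one-sided 5% bar, and records the fifth-best z-score, which is what a researcher free to pick five graphs would show.

```python
import random
from math import sqrt
from statistics import fmean

Z_ONE_SIDED_5PCT = 1.645  # one-sided 5% critical value of the standard normal


def one_null_study(rng: random.Random, measures: int = 50, group_size: int = 10) -> list[float]:
    """z-scores (modified minus control, known SD of 1) for measures on which
    the modification truly does nothing."""
    zs = []
    for _ in range(measures):
        modified = [rng.gauss(0.0, 1.0) for _ in range(group_size)]
        control = [rng.gauss(0.0, 1.0) for _ in range(group_size)]
        zs.append((fmean(modified) - fmean(control)) / sqrt(2 / group_size))
    return zs


def summarize(studies: int = 1_000, seed: int = 7) -> tuple[float, float]:
    """Average count of 'significant' measures, and average 5th-best z-score."""
    rng = random.Random(seed)
    significant_counts, fifth_best = [], []
    for _ in range(studies):
        zs = sorted(one_null_study(rng), reverse=True)
        significant_counts.append(sum(z > Z_ONE_SIDED_5PCT for z in zs))
        fifth_best.append(zs[4])  # the weakest of the five cherry-picked "wins"
    return fmean(significant_counts), fmean(fifth_best)


if __name__ == "__main__":
    avg_sig, avg_fifth = summarize()
    print(f"average 'significant' measures per null study: {avg_sig:.2f}")
    print(f"average z-score of the 5th-best measure: {avg_fifth:.2f}")
```

Under these assumptions a study of a genetic change that does nothing still yields about 2.5 "significant" measures on average, and the fifth-best of 50 measures typically sits around z = 1.3, so five favorable-looking graphs are roughly what chance alone supplies.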

Imagine you are comparing two baseball teams to decide which plays better. There is one generally recognized way of judging which team is better: their position in the team standings. But imagine some baseball scholar has the freedom to compare baseball teams in any of 40 different ways, using metrics such as the total runs scored per season, the average runs scored per game, the total number of games won, the total number of strikeouts per game, the average time spent on a base, the total number of walks, the total number of caught balls, the total number of bat swings, the total number of game throws (practice or non-practice), the cleanliness of uniforms, the average time spent in the field, the average time spent in the batter's box, and so on. By cherry-picking 4 or 5 of these statistics and displaying them in graphs, the baseball scholar could make an average team look like a superior team. Similarly, with the freedom to graph any of about 50 different numbers gathered from any of 10 different experiments, any researcher can make some genetically engineered mice look as if they have better intelligence or memory, even if there is no robust evidence for superior intelligence or memory. In some of the papers discussed above, we do see scientists graphing rarely-used performance metrics, in shady "grasping at straws" attempts to show the superiority of their genetically engineered mice.

If he is doing a low-statistical-power study using far too few subjects for a robust result, a person trying to get evidence for some unfounded claim is greatly helped by using a test whose results differ by 1000% or more from animal to animal of the same species. That way he is much more likely to get a false alarm result caused by one or two random outliers that raise a group average. The Morris Water Maze test (the main test used to test rodent memory) is such a test. Table 1 of the paper here shows that when this test is given to 46 ordinary rats, the results differ from animal to animal by about 3000% or more, with some animals taking only a few seconds to find the hidden platform, and other animals not finding it in even two minutes. A similar situation occurs with mazes used to test intelligence, which produce vast variations in performance (1000% or more) from animal to animal when normal animals of the same species are tested. We should always suspect false alarm results when such tests are done with study group sizes smaller than 15 (and a much larger study group size would be needed for a robust result).
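The effect of a highly variable test can also be sketched. Here escape latencies are assumed to follow a log-normal distribution, an illustrative choice with a long right tail and not data from the paper cited above. Both groups come from the same distribution, and the sketch counts how often one group's mean latency is at least 1.5 times the other's purely because of a few extreme animals.

```python
import random
from statistics import fmean


def big_ratio_rate(group_size: int, ratio: float = 1.5, sigma: float = 1.0,
                   trials: int = 5_000, seed: int = 3) -> float:
    """Fraction of null experiments (identical groups) in which one group's
    mean latency is at least `ratio` times the other group's mean latency."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    hits = 0
    for _ in range(trials):
        # Log-normal latencies: most animals are fast, a few are very slow
        a = fmean(rng.lognormvariate(0.0, sigma) for _ in range(group_size))
        b = fmean(rng.lognormvariate(0.0, sigma) for _ in range(group_size))
        if max(a, b) >= ratio * min(a, b):
            hits += 1
    return hits / trials


if __name__ == "__main__":
    for n in (5, 15, 50):
        print(f"{n} per group: {big_ratio_rate(n):.0%} of null experiments show a 1.5x gap")
```

Under this assumed distribution, a 50% difference between two identical groups turns up far more often with 5 animals per group than with 50, because a single slow outlier can drag a small group's average a long way.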

No matter how long you search, you will not be able to find any well-designed and well-replicated scientific studies with good study group sizes showing any robust evidence for superior intelligence or superior memory in any animals because of genetic engineering.
