The 2024 nominations for the Golden Raspberry Awards, informally called the Razzies, were recently announced. These are awards for the worst movies of the year. A web page tells us this:

"The most-nominated film is 'Expend4bles,' the fourth entry in the action-packed, but critically underwhelming, 'The Expendables' franchise. It received seven nominations. Tied for second place with five noms are 'The Exorcist: Believer,' the revival of the classic horror series, and 'Winnie the Pooh: Blood and Honey,' a blood-soaked take on everyone’s favorite honey-loving bear. Two big-budget superhero movies, DC’s 'Shazam! Fury of the Gods' and Marvel’s 'Ant-Man and the Wasp: Quantumania,' both got four nominations."

In my February 2021 post "The Poor Design of the Latest Mars Mission," written just after the Perseverance rover landed on Mars, I said that because of the poor design of the Perseverance mission, "you will not be hearing any 'NASA discovered life on Mars' announcement anytime in the next few years." So far that prediction has held up. A NASA page said that the "Perseverance Rover will search for signs of ancient microbial life." But no such signs have been found.

A February 2023 article on Science News has the title "What has Perseverance found in two years on Mars?" The long answer basically amounts to: nothing of interest to the general public. Some scientist named Horgan claims, "We’ve had some really interesting results that we’re pretty excited to share with the community." But in the long article we read of no interesting results. The article tries to get us interested with statements such as the claim that the Perseverance rover "has found carbon-based matter in every rock" it analyzed. So what? Carbon is an extremely common element in the universe, and is found in many lifeless places.

The Perseverance rover mission to Mars always had an extremely strange design. The main business of the Perseverance rover has been to dig up soil and put it into soil sample tubes that would simply be dumped on Mars, in hopes that a later mission would retrieve the tubes. The mission design has always seemed utterly bizarre. Why send a rover to Mars to put soil samples into tubes for later retrieval by another spacecraft, when any newly arriving spacecraft could simply dig up Mars soil at the spot where it landed, rather than try to find and retrieve tubes filled with soil years earlier?

The estimated cost of the mission to retrieve these filled tubes has been skyrocketing, rising to more than ten billion dollars. A July article headline read "NASA's Mars Sample Return in jeopardy after US Senate questions budget." Below that headline we read, "If NASA doesn't come up with a tighter budget for the mission, Mars Sample Return may not happen." The other day the headline below appeared in a LiveScience article, saying some are calling the NASA sample return plan "a dumpster fire."

The LiveScience article gives us a clue about how NASA got into its current mess about the sample return mission, making it sound like maybe big lying is going on at the agency, in regard to low-balling cost estimates in order to get funding (a type of lie that defense contractors frequently commit). We read this about the MSR (Mars Sample Return mission):

The term "dumpster fire" would be appropriate to use not just in referring to some Mars sample return plan, but also in referring to the design of the Perseverance mission itself. An exchange like the one below would candidly reveal how bad the mission design was, but you'll never hear so candid an exchange in a NASA congressional hearing:

Senator: So being very concise, in a nutshell, what did we get out of the billions we spent on the Perseverance mission?

NASA Official: Dumped dirty tubes.

- Selectively deleting data to help reach some desired conclusion or a positive result, perhaps while using "outlier removal" or "qualification criteria" to try to justify such arbitrary exclusions, particularly when no such exclusions were agreed on before gathering data, or no such exclusions are justifiable.

- Selectively reclassifying data to help reach some desired conclusion or a positive result.

- Concealing results that contradict your previous research results or your beliefs or assumptions.

- Failing to describe in a paper the "trial and error" nature of some exploratory inquiry, and making it sound as if you had from the beginning some late-arising research plan misleadingly described in the paper as if it had existed before data was gathered.

- Creating some hypothesis after data has been collected, and making it sound as if data was collected to confirm such a hypothesis (Hypothesizing After Results are Known, or HARKing).

- "Slicing and dicing" data by various analytical permutations, until some "statistical significance" can be found (defined as p < .05), a practice sometimes called p-hacking.

- Requesting from a statistician some analysis that produces "statistical significance," so that a positive result can be reported.

- Deliberately stopping the collection of data at some interval not previously selected for the end of data collection, because the data collected thus far met the criteria for a positive finding or a desired finding, and because of a desire not to have the positive result "spoiled" by collecting more data.

- Failing to perform a sample size calculation to figure out how many subjects were needed for a good statistical power in a study claiming some association or correlation.

- Using study group sizes that are too small to produce robust results in a study attempting to produce evidence of correlation or causation rather than mere evidence of occasional occurrence.

- Use of unreliable and subjective techniques for measuring or recording data rather than more reliable and objective techniques (for example, attempting to measure animal fear by using subjective and unreliable judgments of "freezing behavior" rather than objective and reliable measurements of heart rate spikes).

- Failing to publicly publish a hypothesis to be tested and a detailed research plan for gathering and interpreting data prior to the gathering of data, or the use of "make up the process as you go along" techniques that are never described as such.

- Failure to follow a detailed blinding protocol designed to minimize the subjective recording and interpretation of data.

- Failing to use control subjects in an experimental study attempting to show correlation or causal relation, or failure to have subjects perform control tasks. In some cases separate control subjects are needed. For example, if I am testing whether some drug improves health, my experiment should include both subjects given the drug, and subjects not given the drug. In other cases mere "control tasks" may be sufficient. For example, if I am using brain scanning to test whether recalling a memory causes a particular region of the brain to have greater activation, I should test both tasks in which recalling memory is performed, and also "control tasks" in which subjects are asked to think of nothing without recalling anything.

- Using misleading region colorization in a visual that suggests a much greater difference than the actual difference (such as showing in bright red some region of a brain where there was only a 1 part in 200 difference in a BOLD signal, thereby suggesting a "lighting up" effect much stronger than the data indicate).

- Failing to accurately list conflicts of interest of researchers, such as compensation by corporations standing to benefit from particular research findings, or owning shares or options of the stock of such corporations.

- Failing to mention (in the text of a paper or a chart) that only a subset of subjects was used for some particular part of an experiment or observation, giving the impression that some larger group of subjects was used.

- Mixing real data produced from observations with one or more artificially created datasets, in a way that may lead readers to assume that your artificially created data was something other than a purely fictional creation.

- The error discussed in the scientific paper "Erroneous analyses of interactions in neuroscience: a problem of significance," described as "an incorrect procedure involving two separate tests in which researchers conclude that effects differ when one effect is significant (P < 0.05) but the other is not (P > 0.05)." The authors found this "incorrect procedure" occurring in 79 neuroscience papers they analyzed, with the correct procedure occurring in only 78 papers.

- Exposing human research participants to significant risks (such as exposure to lengthy medically unnecessary brain scans) without honestly and fully discussing the possible risks, and getting informed consent from the subjects that they agree to being exposed to such risks.

- Failing to treat human subjects in need of medical treatment for the sake of some double-blind trial in which half of the sick subjects are given placebos.

- Assuming without verification that some human group instructed to do something (such as taking some pill every day) performed the instructions exactly.

- Speaking as if changes in some cells or body chemicals or biological units such as synapses are evidence of a change produced by some experimentally induced experience, while ignoring that such cells or biological units or chemicals undergo types of constant change or remodeling that can plausibly explain the observed changes without assuming any causal relation to the experimentally induced experience.

- Selecting some untypical tiny subset of a much larger set, and overgeneralizing what is found in that tiny subset, suggesting that the larger set has whatever characteristics were found in the tiny subset (a paper refers to "the fact that overgeneralizations from, for example, small or WEIRD [Western, Educated, Industrialized, Rich, and Democratic] samples are pervasive in many top science journals").

- Inaccurately calculating or overestimating statistical significance (a paper tells us "a systematic replication project in psychology found that while 97% of the original studies assessed had statistically significant effects, only 36% of the replications yielded significant findings," suggesting that statistical significance is being massively overestimated).

- Inaccurately calculating or overestimating effect size.
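Two of the statistical items in the list above can be made concrete with a little arithmetic. The sketch below is only an illustration, not a method taken from any of the papers mentioned. It assumes simple z-tests based on the normal distribution, and the function names (`two_sided_p`, `subjects_per_group`) and all of the numbers are invented for the example. The first part shows the kind of sample size calculation that is so often skipped. The second part shows why "one effect is significant and the other is not" does not establish that the two effects differ.

```python
from math import ceil, sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, standard deviation 1


def two_sided_p(estimate: float, std_error: float) -> float:
    """Two-sided p-value for a z-test of `estimate` against zero."""
    z = abs(estimate / std_error)
    return 2 * (1 - STD_NORMAL.cdf(z))


def subjects_per_group(effect_size: float, alpha: float = 0.05,
                       power: float = 0.80) -> int:
    """Approximate subjects needed in each of two groups to detect a
    standardized mean difference (Cohen's d), using the normal
    approximation for a two-sided, two-sample comparison."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STD_NORMAL.inv_cdf(power)
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# Part 1: sample size calculation (illustrative effect sizes only).
for d in (0.8, 0.5, 0.2):
    print(f"effect size d={d}: about {subjects_per_group(d)} subjects per group")

# Part 2: the "significant versus not significant" error.
# Hypothetical numbers: two effects, each measured with standard error 10.
effect_a, effect_b, std_error = 25.0, 10.0, 10.0
p_a = two_sided_p(effect_a, std_error)   # roughly 0.012, "significant"
p_b = two_sided_p(effect_b, std_error)   # roughly 0.317, "not significant"

# The correct procedure tests the difference between the effects directly.
# For independent estimates, the standard errors add in quadrature.
diff_se = sqrt(std_error ** 2 + std_error ** 2)
p_diff = two_sided_p(effect_a - effect_b, diff_se)  # roughly 0.289

print(f"p for effect A: {p_a:.3f}")
print(f"p for effect B: {p_b:.3f}")
print(f"p for A minus B: {p_diff:.3f}")
```

With these made-up numbers, a medium effect (d = 0.5) needs about 63 subjects per group for 80% power, far more than the handful of subjects used in many studies. And although effect A passes the p < .05 threshold while effect B does not, the direct test of their difference comes nowhere near significance, so claiming that the two effects differ would be unjustified.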

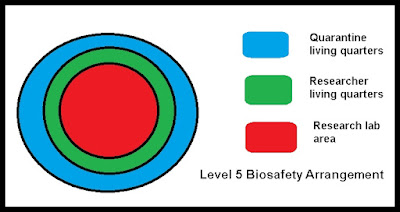

There is a system for classifying the security of pathogen labs, with Level 4 being the highest level currently implemented. It is often claimed that Level 4 labs have the highest possible security. That is far from true. At Level 4 labs, workers arrive for shift work, going home every day, just like regular workers. It is easy to imagine a much safer system in which workers would work at a lab for an assigned number of days, living right next to the lab. We can imagine a system like this:

Under such a scheme, there would be a door system preventing anyone from entering the quarantine area unless the person had just finished working for a Research Period in the green and red areas. Throughout the Research Period (which might be 2, 3 or 4 weeks), workers would work in the red area and live in the green area. Once the Research Period had ended, workers would move to the blue quarantine area for two weeks. Workers with any symptoms of an infectious disease would not be allowed to leave the blue quarantine area until the symptoms resolved.
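The door rules of such a scheme are simple enough to state as a few lines of logic. The sketch below is purely hypothetical: the names (`Worker`, `may_enter_quarantine`, `may_leave_site`) are invented for illustration, and the three-week Research Period is just one of the possible lengths mentioned above.

```python
from dataclasses import dataclass

RESEARCH_PERIOD_DAYS = 21  # assumption: a 3-week Research Period
QUARANTINE_DAYS = 14       # the two-week stay in the blue area


@dataclass
class Worker:
    name: str
    research_days_done: int = 0    # days worked in red, living in green
    quarantine_days_done: int = 0  # days spent in the blue area
    has_symptoms: bool = False     # any symptom of infectious disease


def may_enter_quarantine(worker: Worker) -> bool:
    """The door into the blue area opens only for someone who has
    just completed a full Research Period."""
    return worker.research_days_done >= RESEARCH_PERIOD_DAYS


def may_leave_site(worker: Worker) -> bool:
    """The exit from the blue area opens only after the full
    quarantine, and only for a worker with no symptoms."""
    return (worker.quarantine_days_done >= QUARANTINE_DAYS
            and not worker.has_symptoms)


# Usage: a worker who finished quarantine but still has a fever stays put.
sick = Worker("A", research_days_done=21, quarantine_days_done=14,
              has_symptoms=True)
well = Worker("B", research_days_done=21, quarantine_days_done=14)
print(may_leave_site(sick), may_leave_site(well))
```

The point of writing it out is to show how little is being asked: two checks at two doors.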

There are no labs that implement such a design, which would be much safer than a Level 4 lab (the highest safety now used). I would imagine the main reason such easy-to-implement safeguards have not been implemented is that gene-splicing virologists do not wish to be inconvenienced, and would prefer to go home from work each night like regular office workers. We are all at peril while they enjoy such convenience. Given the power of gene-splicing technologies such as CRISPR, and the failure to implement tight-as-possible safeguards, it seems that some of today's pathogen gene-splicing labs are recklessly playing "megadeath Russian Roulette."

Because they fail to fully protect against pathogen release accidents, partly owing to their not-safe-enough physical designs, today's pathogen labs engaging in "gain of function" research are a "dumpster fire" of bad design. We may all "burn up" in some pandemic coming from such labs.
