In September 2019 I published a post entitled "Contrarian Predictions Regarding Biology, the Brain and Technology." In the post I made 11 predictions. So far my predictions are holding up very well, although you might think otherwise if you fall "hook, line and sinker" for some of the hype and bluff that occurs in the press. The sixth of my 11 predictions was this: "It will never be possible to make computers with a general purpose intelligence." This prediction still holds up very well, although you might think otherwise if you have been fooled by some of the recent hype involving new so-called "artificial intelligence" systems such as ChatGPT.

Every time someone uses the phrase "artificial intelligence" or AI to describe any existing system, they are using a phrase that should not be used. "Artificial intelligence" is just a misleading phrase used to describe systems that are no more intelligent or conscious than a rock. The systems called "artificial intelligence" are merely systems that combine computer programming and data processing.

The average person has little understanding of the enormous potential power of computer programming and data processing, so such a person may be very impressed when he tries using some system such as ChatGPT or Google's new Bard system. To help demystify things, let us look at some of the vast power of a long-standing technology called relational database technology.

Relational database technology allows you to create databases that are centered around tables. A relational database may consist of any number of tables, and each table can have many columns (as many as 1,024 in Microsoft SQL Server, for example) and almost any number of rows. For many years relational database technology has been the main way in which corporations have stored their data.

There are some great ironies involved in the origin of relational database technology. The grand idea behind the technology was first conceived by E. F. Codd, who worked for IBM. In 1970 Codd wrote a breakthrough paper spelling out how to make a relational database. IBM was slow to capitalize on this breakthrough, although it eventually did. While IBM was dragging its heels, people such as Larry Ellison (a co-founder of Oracle) realized that Codd's idea offered a vast opportunity for computer progress, particularly when combined with a language called SQL that made it easy to query relational databases. Ellison ended up making countless billions from Codd's idea, although Codd apparently never even got rich from his breakthrough. A web page says, "Codd never became rich like the entrepreneurs like Larry Ellison, who exploited his ideas."

The wonderful thing about relational database technology is that it is very easy to use, because of a language called SQL that makes it easy to get information from the tables in relational databases. For decades relational databases and SQL have been the backbone of data storage for corporations. Typically a corporation may have a database with information such as this:

- A table listing all of the vendors it deals with.

- A table listing all of the customers who have ever bought anything from the company or paid any money to it for any service.

- A table listing all of the products or services the corporation has.

- A table listing all of the employees of the corporation.

- A table listing every order anyone ever made from the company.

- A table listing the exact details of every part of every such order.
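To make this concrete, here is a minimal sketch using Python's built-in sqlite3 module. The table and column names (Customer, Product, CustomerOrder, OrderDetail) and the sample rows are hypothetical, and the vendor and employee tables are left out to keep it short. The point is that a single SQL query can pull an answer out of several related tables at once.

```python
import sqlite3

# Hypothetical schema for a few of the corporate tables listed above.
SCHEMA = """
CREATE TABLE Customer (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE Product (product_id INTEGER PRIMARY KEY, name TEXT NOT NULL, price REAL NOT NULL);
CREATE TABLE CustomerOrder (                 -- one row per order
    order_id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES Customer(customer_id),
    order_date TEXT NOT NULL
);
CREATE TABLE OrderDetail (                   -- one row per part of an order
    order_id INTEGER NOT NULL REFERENCES CustomerOrder(order_id),
    product_id INTEGER NOT NULL REFERENCES Product(product_id),
    quantity INTEGER NOT NULL
);
"""

def build_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    # Made-up sample rows.
    conn.executemany("INSERT INTO Customer VALUES (?, ?)", [(1, "Acme Ltd"), (2, "Bolt Inc")])
    conn.executemany("INSERT INTO Product VALUES (?, ?, ?)", [(10, "Widget", 2.50), (11, "Gadget", 10.00)])
    conn.executemany("INSERT INTO CustomerOrder VALUES (?, ?, ?)",
                     [(100, 1, "2023-01-05"), (101, 2, "2023-01-06"), (102, 1, "2023-02-01")])
    conn.executemany("INSERT INTO OrderDetail VALUES (?, ?, ?)",
                     [(100, 10, 4), (100, 11, 1), (101, 11, 3), (102, 10, 2)])
    return conn

def customer_totals(conn: sqlite3.Connection) -> list:
    """How much has each customer spent? One query joins four tables."""
    return conn.execute("""
        SELECT c.name, SUM(d.quantity * p.price) AS total_spent
        FROM Customer c
        JOIN CustomerOrder o ON o.customer_id = c.customer_id
        JOIN OrderDetail d ON d.order_id = o.order_id
        JOIN Product p ON p.product_id = d.product_id
        GROUP BY c.name
        ORDER BY total_spent DESC
    """).fetchall()

conn = build_demo_db()
for name, total in customer_totals(conn):
    print(f"{name}: {total:.2f}")
```

The orders table is called CustomerOrder rather than Order because ORDER is a reserved word in SQL.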

Now imagine a table called Person_Visitation. Every single time that records, books or websites mentioned that a particular person was at some particular place on some particular day, a row would be added to this table. Rows would be added for every time period, not just the present. So there would be some particular row in this table recording that Julius Caesar was in Rome on March 15, 44 BC (the date Caesar was assassinated). Rows could also come from social media: if you put up a Tweet claiming that you went to the Taylor Swift concert in Miami on some particular day, a row would end up in this Person_Visitation table. Similarly, a table could be created to store records of any meetings ever recorded on social media.

There would be another table called Meeting, and a detail table called MeetingAttendees listing the people who attended each meeting.
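Here is a minimal sketch of how those three tables might look, again using Python's sqlite3 module. The column choices are assumptions for illustration, and years BC are stored as negative numbers (so 44 BC is -44), a simplification. The two lookup functions, where_was and met_with, are hypothetical names.

```python
import sqlite3

# Hypothetical schemas; years BC are stored as negative numbers (44 BC -> -44).
SCHEMA = """
CREATE TABLE Person_Visitation (
    person TEXT NOT NULL,
    place TEXT NOT NULL,
    year INTEGER NOT NULL, month INTEGER NOT NULL, day INTEGER NOT NULL,
    source TEXT                      -- e.g. a book, a web page, a tweet
);
CREATE TABLE Meeting (
    meeting_id INTEGER PRIMARY KEY,
    place TEXT NOT NULL,
    year INTEGER NOT NULL, month INTEGER NOT NULL, day INTEGER NOT NULL
);
CREATE TABLE MeetingAttendees (      -- detail table: one row per attendee
    meeting_id INTEGER NOT NULL REFERENCES Meeting(meeting_id),
    person TEXT NOT NULL
);
"""

def build_visits_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.execute("INSERT INTO Person_Visitation VALUES "
                 "('Julius Caesar', 'Rome', -44, 3, 15, 'history book')")
    conn.execute("INSERT INTO Meeting VALUES (1, 'Rome', -44, 3, 15)")
    conn.executemany("INSERT INTO MeetingAttendees VALUES (?, ?)",
                     [(1, "Julius Caesar"), (1, "Marcus Brutus")])
    return conn

def where_was(conn, person, year, month, day):
    """Every recorded place for a person on a given day."""
    rows = conn.execute(
        "SELECT place FROM Person_Visitation "
        "WHERE person = ? AND year = ? AND month = ? AND day = ?",
        (person, year, month, day))
    return [place for (place,) in rows]

def met_with(conn, person):
    """Everyone recorded as attending a meeting with this person."""
    rows = conn.execute("""
        SELECT DISTINCT other.person
        FROM MeetingAttendees AS me
        JOIN MeetingAttendees AS other ON other.meeting_id = me.meeting_id
        WHERE me.person = ? AND other.person <> me.person
        ORDER BY other.person""", (person,))
    return [name for (name,) in rows]

conn = build_visits_db()
print(where_was(conn, "Julius Caesar", -44, 3, 15))  # ['Rome']
print(met_with(conn, "Julius Caesar"))               # ['Marcus Brutus']
```

The self-join on MeetingAttendees is the usual way a detail table answers "who was with whom" questions.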

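To build a question-answering system, a web crawler could look for text in formats such as these, storing each as a question and an answer: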

- A phrase or sentence ending with a question mark, followed by some lines of text.

- A header beginning with the words "How" or "Why" and followed by some lines of text (for example, a header of "How the Allies Expelled the Nazis from France" followed by an explanation).

- A header not beginning with the words "How" or "Why" and not ending with a question mark, but followed by some lines that can be combined with the header to make a question and answer (for example, a header of "The Death of Abraham Lincoln," along with a description, which could be stored as a question "How did Abraham Lincoln die?" and an answer).

- A header written in the form of a request or an imperative, and some lines following such a header (for example, a header of "write a program that parses a text line and says 'you mentioned a fruit' whenever the person mentions a fruit" would be stored so that the header was converted to a question such as "How do you write a program that...?" and the solution stored as the answer).
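The four patterns above could be handled by simple rules. The sketch below is a crude, hypothetical rule set in Python: the list of imperative verbs and the "What is known about ...?" template for plain headers are assumptions, and turning "The Death of Abraham Lincoln" into "How did Abraham Lincoln die?" would need much better language handling than a rule this simple.

```python
from typing import NamedTuple

class QAPair(NamedTuple):
    question: str
    answer: str

# Hypothetical list of verbs that mark a header as a request or imperative.
IMPERATIVE_VERBS = {"write", "explain", "describe", "list", "make", "create", "show"}

def to_qa_pair(header: str, body: str) -> QAPair:
    """Store a header and the lines under it as a question and an answer,
    using crude rules for the four patterns listed above."""
    header = header.strip()
    words = header.split()
    first_word = words[0].lower() if words else ""

    if header.endswith("?") or first_word in {"how", "why"}:
        # Patterns 1 and 2: the header already reads as a question.
        question = header
    elif first_word in IMPERATIVE_VERBS:
        # Pattern 4: "write a program that ..." -> "How do you write a program that ...?"
        question = f"How do you {header[0].lower()}{header[1:]}?"
    else:
        # Pattern 3: a plain topic header, wrapped in a generic question template.
        question = f"What is known about {header}?"
    return QAPair(question, body.strip())

pair = to_qa_pair("Write a program that lists primes", "...program text...")
print(pair.question)  # How do you write a program that lists primes?
```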

Crawling the entire Internet and vast online libraries of books such as www.archive.org and Google Books, the system could create a database of hundreds of millions or possibly even billions of questions and answers. In many cases the database would have multiple answers to the same question, but some algorithm could handle such diversity. The system might give whichever answer was the most popular. Or it might choose one answer at random. Or it might combine multiple answers, adding text such as "Some people say..." or "It is generally believed...". Included in this question and answer database would be the answer to almost every riddle ever posed. So suppose someone asked the system a tricky riddle such as "Which timepiece has the most moving parts?" The system might instantly answer "An hourglass." This would not involve anything like thinking. The system would simply be retrieving an answer to that question it had already stored. And if you asked the system to write a program in Python that lists all prime numbers between 20,000 and 30,000, the system might simply find the closest match stored in its vast database of questions and answers, and massage the answer by doing some search and replace.
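A minimal sketch of that retrieval step, assuming a question-and-answer store held in a Python dictionary and using the standard library's difflib.get_close_matches to find the closest stored question. The sample entries are made up, and the three policies (most popular, random, combined) mirror the options described above; the function name and the 0.8 similarity cutoff are arbitrary choices.

```python
import difflib
import random
from collections import Counter
from typing import Optional

# Hypothetical question-and-answer store built ahead of time by a crawler.
# Each normalized question maps to every answer found for it (duplicates kept,
# so that popularity can be counted). The entries are made-up samples.
QA_STORE = {
    "which timepiece has the most moving parts?":
        ["An hourglass.", "An hourglass.", "A grandfather clock."],
    "what is the capital of france?": ["Paris."],
}

def answer(question: str, policy: str = "popular") -> Optional[str]:
    """Find the closest stored question and pick an answer by the given policy."""
    key = question.strip().lower()
    matches = difflib.get_close_matches(key, list(QA_STORE), n=1, cutoff=0.8)
    if not matches:
        return None                          # nothing stored is close enough
    answers = QA_STORE[matches[0]]
    if policy == "popular":                  # the answer found most often
        return Counter(answers).most_common(1)[0][0]
    if policy == "random":                   # any one stored answer
        return random.choice(answers)
    distinct = list(dict.fromkeys(answers))  # otherwise combine distinct answers
    if len(distinct) == 1:
        return distinct[0]
    return " ".join("Some people say: " + a for a in distinct)

print(answer("Which timepiece has the most moving parts?"))  # An hourglass.
```

Because the match is fuzzy, a slightly reworded question (say, without the question mark) still retrieves the stored answer.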

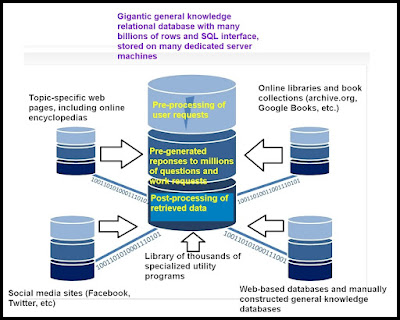

My speculations above are mainly educated guesses, based on my personal experience with the kind of technology typically used to implement big impressive software projects. The actual technology used by today's so-called "AI chatbots" may be different. But my suspicions about what is "under-the-hood" of such systems are probably correct, at least in rough form, particularly my suspicion that 90% of what is going on is simply data processing powered by data extracted from online sources.

The use of the term "artificial intelligence" to describe such systems is not at all appropriate. Such systems are simply very large projects that leverage computer programming and massive amounts of data processing and data storage. I suspect that there hasn't been any real breakthrough in "artificial intelligence" from an algorithmic standpoint. But what has gone on very steadily for the past 40 years is a vast accumulation of data and written information online, much of it either in question-and-answer format or in a format easily converted into question-and-answer format. According to a 2016 web page, the number of web pages indexed by Google increased 900% between 2008 and 2014, reaching 30 trillion by 2014. We can presume that similar growth has occurred since 2014.

All of that vastly increasing online information was a resource capable of being exploited by any major corporation determined to leverage it by producing some "AI chatbot" system that would sound super-knowledgeable. For decades, relational database technology has been an extremely flexible and powerful technology for storing and retrieving information. Relational database technology has mostly been used in a very limited fashion (such as when a company creates a database storing its products, orders, customers and employees). There has always been a huge opportunity for the creation of answer systems once some big corporation combined the huge amount of data online with the power of relational database technology. Such an exploitation is probably what powered the rise of search engines such as Google, and in ChatGPT we are mainly just seeing a different type of exploitation of the possibilities opened up when you have vast amounts of data online and ways of storing it and retrieving it as efficiently as you can with relational database technologies.

What is going on is almost entirely data processing and computer programming, with very little occurring that bears any resemblance to human thinking. But what about the ability of some "AI chatbot" to tell an appropriate story? Probably no "on-the-spot creativity" is occurring in such cases. I can imagine how you could get that to work. Scanning the internet, the system might find millions of stories, and create a million different "story templates." Actual names could be replaced with placeholders. So, for example, imagine the web crawler finds a story like this:

"Something interesting happened when my mom went to Chicago. She says that she got a nice hotel room downtown. But each night the lights would flicker several times. At first she thought that there might be some kind of ghost haunting the room. But when she told the hotel clerk about the problem, the clerk said, 'Don't worry, they were just doing some work on the electricity.' But on her last day when my mom left her room, a maid said, 'Oh, you were in Room 8C, did you see the lights flicker?' My mom said 'Yes.' The maid said, 'That only happens in Room 8C, probably because that's where a poor actor committed suicide, and we think the room is haunted.' "

Finding this story somewhere on the Internet, the web crawler used to make the "AI Chatbot" creates a story template, replacing specific names with placeholders like this:

"Something interesting happened when [PERSON X] went to [LOCATION Y]. She says that she got a nice hotel room downtown. But each night [SPOOKY OCCURRENCE]. At first she thought that there might be some kind of ghost haunting the room. But when she told the hotel clerk about the problem, the clerk said, 'Don't worry, they were just doing some work on the electricity.' But on her last day when [PERSON X] left her room, a maid said, 'Oh, you were in Room [ROOM NUMBER], did you see [SPOOKY OCCURRENCE]?' [PERSON X] said 'Yes.' The maid said, 'That only happens in Room [ROOM NUMBER], probably because that's where a poor [JOB HOLDER] committed suicide, and we think the room is haunted.' "

After finding this story by web crawling, the system would store this story template as one of a million story templates in its database, each of which would be categorized. This story might be categorized as a ghost story. Then if you ask the system, "Tell me a ghost story about Taylor Swift," the system might randomly select any of its story templates that had been categorized as a ghost story. You might get the story above, but with the PERSON X in the text above replaced with "Taylor Swift," and the LOCATION Y replaced with a random American city. With a million categorized story templates in its database, created by web crawling and looking for stories to be made into story templates, it is very easy for the "AI chatbot" to create a story of the type you requested. No actual creativity is involved. It's just data retrieval with a little search-and-replace.
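The template approach can be sketched in a few lines of Python. The template store, the category names, the list of cities and the filler values are all hypothetical, and the templates are abridged; the only operations are a random choice among the templates in the requested category and a plain search-and-replace of each bracketed placeholder.

```python
import random
from typing import Optional

# Hypothetical template store: (category, template) pairs using the bracketed
# placeholder style shown above. Templates are abridged, made-up samples.
TEMPLATES = [
    ("ghost story",
     "Something interesting happened when [PERSON X] went to [LOCATION Y]. "
     "Each night [SPOOKY OCCURRENCE]. On the last day a maid said, "
     "'That only happens in Room [ROOM NUMBER].'"),
    ("ghost story",
     "[PERSON X] rented an old house in [LOCATION Y], and every evening "
     "[SPOOKY OCCURRENCE]."),
    ("travel story",
     "[PERSON X] spent a week in [LOCATION Y] and loved every minute."),
]
CITIES = ["Chicago", "Denver", "Boston", "Seattle"]   # illustrative filler values

def tell_story(category: str, person: str, rng: Optional[random.Random] = None) -> str:
    """Pick a random template in the category and fill its placeholders."""
    rng = rng or random.Random()
    candidates = [text for cat, text in TEMPLATES if cat == category]
    if not candidates:
        raise ValueError(f"no templates stored for category {category!r}")
    story = rng.choice(candidates)
    fillers = {
        "[PERSON X]": person,
        "[LOCATION Y]": rng.choice(CITIES),
        "[SPOOKY OCCURRENCE]": "the lights would flicker several times",
        "[ROOM NUMBER]": f"{rng.randint(1, 20)}C",
    }
    for placeholder, value in fillers.items():  # plain search-and-replace
        story = story.replace(placeholder, value)
    return story

print(tell_story("ghost story", "Taylor Swift"))
```

Passing a seeded random.Random makes the choice repeatable, which is handy for testing.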

A chatbot such as ChatGPT can also do summarization chores. You can ask it something like "Summarize the plot of the movie Casablanca so that a six-year-old can understand it." This would be easy for a system built along the lines I described. Among the datasets that the system would ingest are all of those listed on one web page of available datasets. These include 35 million Amazon reviews, 8.5 million Yelp reviews, 10,000 Rotten Tomatoes reviews, and so forth. While crawling the web and such datasets, the "AI chatbot" system would have found summaries of countless consumer products, books, TV shows, stories, songs and movies. The result might be a "Summaries" table with hundreds of millions or even billions of rows. Answering a question such as the previous one would be a matter of simply retrieving one of those summaries, and then running it through some post-processing "simplification" filter that would do a search-and-replace operation so that simple words were used. So, for example, such a filter might replace "Nazis" with "the bad guys."
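Here is a sketch of the retrieve-then-simplify idea, assuming a tiny in-memory "Summaries" table keyed by kind and title, and a hand-written list of search-and-replace rules. The summary text, the word list and the function names are illustrative; a real filter would need a much larger vocabulary.

```python
import re
from typing import Optional

# Hypothetical "Summaries" table keyed by (kind, title). A real system might
# hold millions of rows; this one holds a single hand-written summary.
SUMMARIES = {
    ("movie", "casablanca"):
        "Rick Blaine, an American nightclub owner in Casablanca, helps his "
        "former lover Ilsa and her husband Victor Laszlo flee the Nazis.",
}

# Ordered search-and-replace rules for the "simplification" filter. Longer
# phrases come first so "the Nazis" does not become "the the bad guys".
SIMPLER_WORDS = [
    ("the Nazis", "the bad guys"),
    ("Nazis", "the bad guys"),
    ("former lover", "old friend"),
    ("flee", "get away from"),
    ("nightclub owner", "man who runs a club"),
]

def simplify(text: str) -> str:
    for hard, easy in SIMPLER_WORDS:
        text = re.sub(rf"\b{re.escape(hard)}\b", easy, text)  # whole words only
    return text

def summarize_for_child(kind: str, title: str) -> Optional[str]:
    summary = SUMMARIES.get((kind, title.strip().lower()))
    return simplify(summary) if summary else None

print(summarize_for_child("movie", "Casablanca"))
```

The word-boundary anchors keep a rule such as "flee" from firing inside a longer word such as "fleet".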

I must emphasize that whenever I refer to web crawling, I am talking about work done long before you typed in your "AI chatbot" prompt, to get the system ready so that it can respond very quickly to questions like the one you typed. Any "AI chatbot" system would need to be based on some massive database produced by months or years of web crawling done before the system was announced as a finished product.

There is a type of computer program called a utility program. A utility program is a piece of software that performs some very specific task, such as maybe simplifying a block of text or summarizing a block of text or counting all the verbs in a block of text or maybe making a block of text sound more professorial by replacing some of its simple words with fancy words. During the past forty years, computer programmers have created many, many thousands of different utility programs. There's almost a utility program for every task you might imagine. To help impress you, the "AI chatbots" such as ChatGPT have probably incorporated access to many thousands of different utility programs. When you type in some types of request, the "AI chatbot" probably just routes your request to one of countless utility programs it has at its disposal. The only thinking that is involved is the thinking that occurred when some utility software programmer wrote his program in the past.
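The routing idea might look something like this minimal Python sketch. The three utility functions and the trigger phrases are hypothetical stand-ins for real utility programs; the router simply checks the request for a trigger phrase and hands the text to the matching function.

```python
import re
from typing import Callable

# Hypothetical utility programs; each does one narrow job on a block of text.
def count_words(text: str) -> str:
    return f"{len(text.split())} words"

def professorial(text: str) -> str:
    # Swap some simple words for fancier ones, leaving other words untouched.
    fancy = {"use": "utilize", "help": "facilitate", "show": "demonstrate"}
    return re.sub(r"\b\w+\b", lambda m: fancy.get(m.group(0), m.group(0)), text)

def first_sentence(text: str) -> str:
    # A deliberately crude "summarizer": keep only the first sentence.
    return re.split(r"(?<=[.!?])\s+", text.strip(), maxsplit=1)[0]

# Routing table: a trigger phrase in the request selects the utility program.
ROUTES: dict[str, Callable[[str], str]] = {
    "count the words": count_words,
    "sound more professorial": professorial,
    "summarize": first_sentence,
}

def route(request: str, text: str) -> str:
    lowered = request.lower()
    for trigger, utility in ROUTES.items():
        if trigger in lowered:
            return utility(text)
    return "No utility program matched this request."

print(route("Make this sound more professorial", "We use tools to help people."))
```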

Computer programmers and database experts have a thousand and one "tricks of the trade" that can be employed by some "AI chatbot" system. When a system uses very many of those tricks and also has a gigantic database to use for retrievals, one produced by many days, months or years of web crawling, you might get the impression that you're dealing with a mind that's really smart. You're not. You're just dealing with data processing, computer programming and a giant mountain of data that has been placed in a database that makes the data very easy to retrieve. The operations that are going on are usually nothing very brilliant: things like rudimentary preprocessing, database retrieval, and simple post-processing. 99% of the time nothing particularly brilliant is going on. Similarly, if you call up your dumb friend John, he might impress you as being very smart, if he answers your questions by doing Google searches and then reading the answers.

When people say that something like ChatGPT means that we should worry about artificial intelligence taking over the world, they are making ridiculous errors. Systems such as ChatGPT are simply big complex examples of computer programming and data processing. No real thinking or consciousness or insight or understanding is occurring. A system like ChatGPT is mainly an impressive bag of tricks and a well-organized mountain of data almost as big as the Internet.

AI is also used in game programs, such as chess programs. Interestingly, the oldest AI of which I am aware was a rudimentary chess-playing computer that used a very restricted board set-up. It was unbeatable. More interestingly yet, the computer had no software. Fantastically (because I ran out of variations on the word "interesting"), the machine was made entirely out of electromagnetic relays. Just as in your examples, no thinking was required by the AI. As soon as the human player pressed the buttons to make a move (from / to), the electric circuitry instantly connected in such a way as to make the machine's responding move. For each from/to push of the buttons, there was only one possible response, because the circuits were connected by on/off buttons. There could never be any variation for any from/to combination. From A1 to B3 always connected to circuit XZ, which moved the computer's chess pieces. Some modern chess software uses libraries of game positions such that, if the human makes a "fatal" mistake, the computer instantly identifies the response from the library of positions and pounces, with no thinking involved.
