Since I do not wish to build a castle floating in the air, I must first lay a solid foundation for this new theory. We can build that foundation by probing one of the most neglected questions relating to nature: what, if any, are the computation requirements of nature? By this I mean: does nature need to do some type of computation? If so, how much computation does nature need to do, and what elements of computation would nature need to satisfy such requirements?

The CRON Problem

Let's create an acronym to describe this problem. Let's call the problem the CRON problem. CRON stands for Computation Requirements of the Operations of Nature. We can define it like this:

--------------------------------------------------------

CRON Problem: Are there computation requirements in nature's operations -- things such as math that needs to be done, algorithms that need to be followed, or information that needs to be stored, retrieved or transferred? If so, roughly what is the level of computation that nature needs to do, and what elements of computation do we need to postulate to satisfy the computation requirements of nature?

--------------------------------------------------------

In the definition above (and throughout this post) I use the word computation very broadly to mean any operation involving calculation, the execution of rules or instructions, or the manipulation or transfer of information (as well as other actions that are or seem to be goal-oriented, rule-based, or algorithmic, that seem to methodically derive particular outputs from particular inputs, or that seem to involve the use or storage of data). Under this broad definition, your computer is doing computation when it calculates some number, and it is also doing computation when you do a Google search or download a film.

The Biological Answer: There Definitely Are Computation Requirements, Which We Largely Understand

Let us first examine this CRON problem as it relates to biology. Imagine if you raised this CRON question to a biologist around 1930, asking him: are there computation requirements that nature must meet in order to perform the operations of biology?

I can easily imagine the answer the biologist might have given: “Don't be ridiculous. Computation is something done with pencil and paper, or by big bulky calculating machines. None of that goes on in the operations of biology.”

But we now know that this answer is false. Biology requires a large degree of computation, in the sense of information storage and information transfer. The main information storage medium is DNA, which uses a genetic code that is a kind of tiny programming language. Every time a new organism is conceived and born, there is a transfer of information comparable to a huge data dump done by the IT division of a corporation. A gene is somewhat like what object-oriented languages call a class (a template from which objects are built), and each time an organism uses a gene to create a protein, the step resembles the programming operation known as instantiation.
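To make the “tiny programming language” comparison concrete, here is a minimal C# sketch of how the genetic code is read: a sequence is consumed three letters (one codon) at a time, and each codon is looked up to give an amino acid until a stop codon ends the protein. Assumptions: the table holds only a handful of the 64 codons of the standard genetic code, codons are written with the DNA letters of the coding strand (the cell actually reads an mRNA copy, with U in place of T), and the GeneticCode class and Translate method are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper: translates a coding-strand DNA sequence into a protein
// string using a SMALL SUBSET of the standard genetic code.
public static class GeneticCode
{
    // Partial codon table (the full standard code has 64 entries).
    // One-letter amino-acid symbols: M = methionine, F = phenylalanine,
    // G = glycine, A = alanine, K = lysine, W = tryptophan; '*' marks "stop".
    private static readonly Dictionary<string, char> Codons = new Dictionary<string, char>
    {
        ["ATG"] = 'M', ["TTT"] = 'F', ["TTC"] = 'F', ["GGC"] = 'G',
        ["GCT"] = 'A', ["AAA"] = 'K', ["TGG"] = 'W',
        ["TAA"] = '*', ["TAG"] = '*', ["TGA"] = '*'
    };

    public static string Translate(string dna)
    {
        var protein = new StringBuilder();
        // Read the sequence one codon (three letters) at a time.
        for (int i = 0; i + 3 <= dna.Length; i += 3)
        {
            string codon = dna.Substring(i, 3);
            if (!Codons.TryGetValue(codon, out char aminoAcid))
                throw new ArgumentException($"Codon {codon} is not in this partial table.");
            if (aminoAcid == '*') break;   // a stop codon ends translation
            protein.Append(aminoAcid);
        }
        return protein.ToString();
    }
}
```

For example, Translate("ATGTTTGGCAAATAAGGC") yields "MFGK": methionine, phenylalanine, glycine and lysine, after which the stop codon TAA halts translation before the final GGC is read.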

This example is quite interesting because it shows two things:

- There was actually a huge computation requirement associated with a particular branch of nature (biology).

- This computation requirement was overlooked by scientists in the field, who simply failed to consider the computation requirements of the processes they were studying.

Does Nature Need to Compute to Handle Relativistic Particle Collisions?

Let's first look at a case that may seem quite simple: two highly energetic protons colliding at a good fraction of the speed of light (such a collision is called a relativistic collision). Collisions like this happened very frequently in the early universe, shortly after the Big Bang. They also happen in huge particle accelerators such as the Large Hadron Collider, where protons are collided at very high speeds.

You might think that in such a case the particles simply bounce off each other, as would happen if two fastball pitchers threw fastballs at each other and the balls collided. But if the two protons collide at a very high speed, a good fraction of the speed of light, something very different happens: the two protons are converted into other particles, perhaps many different particles. An example of this is shown below.

Now, in regard to computation requirements, one might think at first that such a collision does not require any computation, since the end result is just a completely random mess. But in fact nature follows an exact set of rules whenever such collisions occur:

- The equation $E = mc^2$ is followed to compute the available mass-energy that can be used to create the output particles. (The actual “available energy” equation, involving a square root, is a little more complicated, but it is based on the $E = mc^2$ equation.)

- Rules are followed in regard to the mass of the created particles. The created particles are always one of fewer than about 200 types (most of them short-lived), and each type of particle has a particular mass and a particular charge. It is as if nature has only a short list of allowed particles (each with a particular mass and charge), and creates particles only from that list, rather than allowing particles of any old mass to be created (rather like a mother who makes cookies only with a small set of cookie cutters). For example, we may see the creation of a particle with the mass of the electron, but never see a particle created with two or three or four times the mass of the electron. The stable particles that result from the collision (not counting antiparticles) are always protons, electrons, photons, or neutrinos, or nuclei made from protons and neutrons (a free neutron decays within minutes).

- The collision follows a law called the law of the conservation of charge, which means that the net electric charge (total positive charge minus total negative charge) is always precisely the same before and after the collision. This means, for example, that a collision of two protons (with a net charge of two positive units) can never produce a set of particles whose net charge is anything other than two positive units.

- The collision follows a law called the law of the conservation of baryon number, which means that the total baryon number of the incoming particles is the same as the total baryon number of the particles that result from the collision.

- The collision follows a law called the law of the conservation of lepton number, which puts further restrictions on the set of output particles that can appear as the result of the collision. This law is actually three laws in one, each relating to a particular type of lepton.
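The “available energy” rule in the first item above can be written out for the simplest case. In natural units (energies, momenta and masses in GeV, with $c = 1$), the energy available in the centre-of-mass frame of two colliding particles is $\sqrt{s}$, where $s = m_1^2 + m_2^2 + 2(E_1E_2 - \vec p_1 \cdot \vec p_2)$. The minimal C# sketch below assumes a head-on collision (momenta pointing in opposite directions, so $\vec p_1 \cdot \vec p_2 = -|\vec p_1||\vec p_2|$); the class and method names are hypothetical, not taken from any physics library.

```csharp
using System;

// Sketch: centre-of-mass ("available") energy for a head-on collision of two
// particles, in natural units (GeV, with c = 1). Names are hypothetical.
public static class Collision
{
    public const double ProtonMassGeV = 0.938272;   // proton rest energy m c^2, in GeV

    // Momentum magnitude from total energy and rest mass: p = sqrt(E^2 - m^2).
    static double Momentum(double energy, double mass) =>
        Math.Sqrt(Math.Max(0.0, energy * energy - mass * mass));

    // sqrt(s), with s = m1^2 + m2^2 + 2(E1*E2 + |p1||p2|) for opposite momenta.
    public static double AvailableEnergyHeadOn(double e1, double m1, double e2, double m2)
    {
        double p1 = Momentum(e1, m1);
        double p2 = Momentum(e2, m2);
        double s = m1 * m1 + m2 * m2 + 2.0 * (e1 * e2 + p1 * p2);
        return Math.Sqrt(s);
    }
}
```

For two 6,500 GeV proton beams, the setting the Large Hadron Collider used from 2015 to 2018, this gives 13,000 GeV (13 TeV), the upper limit on the total mass-energy the output particles can carry. The same function covers a fixed-target collision: pass the target's rest mass as its energy, so that its momentum is zero.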

It seems that a nontrivial amount of computation is required for all of this to occur. If you doubt this, consider what you would need to do if you were a computer programmer trying to write a program that simulates the results nature produces when two protons collide at a large fraction of the speed of light. Your program would need some kind of list of the allowed output particles, a list of fewer than about 200 possibilities that would include the proton, the electron, the neutron, mesons, the muon, a few other particles, and their antiparticles (along with the mass and charge of each). Your program would have to compute the available energy for output particles, using an equation that makes use of a constant you had declared in your program to represent the speed of light. It would also need some elaborate computation to derive, from the list of particles, a set of output particles that satisfies the available energy limit as well as the law of the conservation of baryon number, the law of the conservation of charge, and the law of the conservation of lepton number. This would take quite a bit of programming. It would probably take the average skilled programmer several days to produce the required code, and it could easily take weeks to produce code that performs these computations more or less instantaneously, without a loop that relies on trial-and-error iteration.
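Part of the program described above, checking one proposed set of output particles against the conservation laws and the energy limit, might look like the following C# sketch. It is a minimal illustration under loudly stated assumptions: the particle table is a tiny hypothetical excerpt (ten entries, approximate masses in GeV), only electron and muon lepton numbers are tracked, momentum conservation is ignored, and all type and method names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// One entry in a (hypothetical, heavily abbreviated) list of allowed particles.
public record ParticleType(string Name, double MassGeV, int Charge, int Baryon, int LeptonE, int LeptonMu);

public static class FinalStateChecker
{
    // Ten sample entries; the real list has far more. Masses are approximate.
    public static readonly Dictionary<string, ParticleType> Table = new[]
    {
        new ParticleType("p",    0.938272, +1, +1,  0,  0),
        new ParticleType("n",    0.939565,  0, +1,  0,  0),
        new ParticleType("pi+",  0.139570, +1,  0,  0,  0),
        new ParticleType("pi-",  0.139570, -1,  0,  0,  0),
        new ParticleType("pi0",  0.134977,  0,  0,  0,  0),
        new ParticleType("e-",   0.000511, -1,  0, +1,  0),
        new ParticleType("e+",   0.000511, +1,  0, -1,  0),
        new ParticleType("nu_e", 0.0,       0,  0, +1,  0),
        new ParticleType("mu-",  0.105658, -1,  0,  0, +1),
        new ParticleType("mu+",  0.105658, +1,  0,  0, -1),
    }.ToDictionary(t => t.Name);

    // True if 'outputs' conserves charge, baryon number and both lepton numbers
    // relative to 'inputs', and its total rest mass fits within availableGeV.
    public static bool IsAllowed(string[] inputs, string[] outputs, double availableGeV)
    {
        var ins = inputs.Select(name => Table[name]).ToList();
        var outs = outputs.Select(name => Table[name]).ToList();
        return ins.Sum(t => t.Charge) == outs.Sum(t => t.Charge)
            && ins.Sum(t => t.Baryon) == outs.Sum(t => t.Baryon)
            && ins.Sum(t => t.LeptonE) == outs.Sum(t => t.LeptonE)
            && ins.Sum(t => t.LeptonMu) == outs.Sum(t => t.LeptonMu)
            && outs.Sum(t => t.MassGeV) <= availableGeV;
    }
}
```

With 13,000 GeV available, p + p → p + p + π⁺ + π⁻ passes every check, while p + p → p + p + π⁺ fails charge conservation and p + p → p + π⁺ fails baryon-number conservation. A real event generator would also have to search among many candidate combinations and pick one with the right probabilities; this sketch only performs the checking step.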

So we seem to have come to a very interesting result here: it would seem that significant computation is indeed required by nature to handle events such as relativistic proton collisions.

One can imagine a simpler universe in which high-speed protons always simply bounce off each other when they collide, like two baseballs that collide after being pitched by two fastball pitchers aiming at each other. That simple behavior might not require any computation by nature, but the very different behavior that occurs in our universe when relativistic protons collide (involving a whole series of complicated rules that must be rigidly followed) does seem to require computation.

The creation of particles that occurs in a relativistic particle collision is actually strikingly similar to the instantiation of objects (using a class) that occurs in object-oriented software programming. Explaining this point would take several paragraphs, and I don't want this already lengthy post to get any longer, so I will leave this explanation for a separate future blog post.

The preceding case may disturb someone who doesn't want to believe that nature needs to compute. But such a person may at least comfort himself by thinking: that's just a freak case; nowadays those relativistic particle collisions only occur in a few particle accelerators.

But now let us look at more general operations of nature, those involving the four fundamental forces of nature. Let us ask the question: does nature need to do computation in order to handle the fundamental forces that allow us and our planet to exist from day to day?

The four fundamental forces in nature are the strong nuclear force, the weak nuclear force, the electromagnetic force, and the gravitational force.

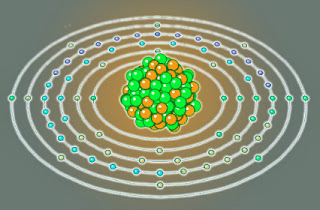

The strong nuclear force is the glue-like force that holds together the protons and neutrons in the nucleus of an atom. The range of this force is extremely small, so we don't seem to have a very obvious case here that a large amount of computation needs to be done for the force to work (although once we got into all the very complex calculations needed to compute the strong nuclear force, we might think differently).

The weak nuclear force is the fundamental force driving beta decay, one of the main forms of radioactivity. An interesting aspect of radioactivity is its random nature. It would seem that if you were to write a computer program simulating the behavior of radioactive particles, you would need to make use of a piece of software functionality called a random number generator. So it could be that nature does need to do some type of computation for the weak nuclear force to occur, but this is not at all the most compelling case where natural operations seem to require programming.
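A simulation of the kind just described might look like the following minimal C# sketch. It assumes a simple model in which every undecayed nucleus decays during a short time step dt with probability $1 - e^{-\lambda\,dt}$, where $\lambda = \ln 2 / T_{1/2}$ and $T_{1/2}$ is the half-life; a seeded System.Random plays the role of the random number generator, and the DecaySimulation class and Remaining method are hypothetical names.

```csharp
using System;

public static class DecaySimulation
{
    // Returns how many of 'nuclei' remain undecayed after 'steps' time steps of length dt.
    public static int Remaining(int nuclei, double halfLife, double dt, int steps, int seed)
    {
        var rng = new Random(seed);                     // the "random number generator"
        double lambda = Math.Log(2.0) / halfLife;       // decay constant
        double pDecay = 1.0 - Math.Exp(-lambda * dt);   // chance of decaying within one step

        int remaining = nuclei;
        for (int step = 0; step < steps; step++)
        {
            int decayedThisStep = 0;
            for (int i = 0; i < remaining; i++)
            {
                // Each surviving nucleus independently "rolls the dice".
                if (rng.NextDouble() < pDecay) decayedThisStep++;
            }
            remaining -= decayedThisStep;
        }
        return remaining;
    }
}
```

With 100,000 nuclei, a half-life of 1 time unit and 100 steps of 0.01, roughly half the nuclei should remain after the run; the exact count depends on the seed, which is the whole point of drawing on a random number generator.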

When we come to the gravitational force, we have a very different situation. It seems that for nature to handle gravitation as we understand it, insanely high amounts of computation are required.

This may seem surprising to someone familiar with the famous formula for gravitation, which is quite simple. The formula is shown below:

$$F = \frac{G\,m_1 m_2}{d^2}$$

In this formula, $F$ is the gravitational force, $G$ is the gravitational constant, $m_1$ is the first mass, $m_2$ is the second mass, and $d$ is the distance between the masses.

Now, looking at this formula, you may think: it looks like nature has to do a little calculation to compute gravity, but it's not much, so we can just ignore it.

But to fully compute the gravitational forces acting on any one object or particle in the universe, we must do an almost infinitely more expensive calculation, one that must involve the masses of all other objects in the universe. This is because gravitation is an inverse-square law with infinite range. Every single massive object in the universe is exerting a gravitational force on you and on every other massive object.

I may note the very interesting fact that no physicist in the history of science has ever done even a billionth of the work needed to completely compute the gravitational forces acting on any single particle or object.

To illustrate the computation required to calculate the gravitational forces acting on a single object, I can write a little function in the C# programming language:

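    // Universe, GravitationalConstant, ComputeDistanceBetweenParticles and
    // ApplyForce are assumed to be defined elsewhere.
    void ComputeGravitationalForcesOnParticle(Particle oParticleX)
    {
        foreach (Particle oParticleY in Universe)
        {
            if (oParticleY == oParticleX) continue;   // a particle does not pull on itself

            // Newton's law of gravitation: F = G * m1 * m2 / d^2
            double dDistance = ComputeDistanceBetweenParticles(oParticleX, oParticleY);
            double dForce = GravitationalConstant * oParticleX.Mass * oParticleY.Mass
                            / (dDistance * dDistance);

            // Pull oParticleX toward oParticleY with this magnitude.
            ApplyForce(dForce, oParticleX, oParticleY);
        }
    }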

That is already quite a bit of computation, since the loop runs once for every particle in the universe. But to compute the gravitational forces on all particles in the universe at any given instant, nature must do vastly more work than the equivalent of running this one expensive function. Nature has to do the equivalent of a double loop, in which the loop above is just the inner loop. To represent this in the C# language, we would need code something like this:

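    foreach (Particle oParticleX in Universe)          // every particle being pulled...
    {
        foreach (Particle oParticleY in Universe)      // ...by every other particle
        {
            if (oParticleY == oParticleX) continue;   // skip self-interaction

            // Newton's law of gravitation: F = G * m1 * m2 / d^2
            double dDistance = ComputeDistanceBetweenParticles(oParticleX, oParticleY);
            double dForce = GravitationalConstant * oParticleX.Mass * oParticleY.Mass
                            / (dDistance * dDistance);

            ApplyForce(dForce, oParticleX, oParticleY);
        }
    }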

In any case in which you calculate, say, once every second rather than at every tiny fraction of a second, the calculation would have to be much more complicated, as it would have to take into account the relative motion between objects during that interval.

This makes it pretty clear that nature does indeed need to compute in order for gravitation to occur. It would seem that the computational requirements of gravitation are insanely high.
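The double loop shown earlier can be turned into a small runnable C# sketch that also deals with the motion between calculation moments. It evaluates Newton's law over every ordered pair of bodies (so N bodies cost N(N − 1) evaluations per step) and then advances the bodies with a semi-implicit Euler step. Assumptions: the Body and NBody types are hypothetical, the example is two-dimensional for brevity, G is rounded, and real N-body codes use more accurate integrators and often tree or mesh approximations instead of the full double loop.

```csharp
using System;

// A point mass in two dimensions (hypothetical type for this sketch).
public class Body
{
    public double Mass, X, Y, Vx, Vy;
    public Body(double mass, double x, double y, double vx, double vy)
    {
        Mass = mass; X = x; Y = y; Vx = vx; Vy = vy;
    }
}

public static class NBody
{
    public const double G = 6.674e-11;   // gravitational constant, m^3 kg^-1 s^-2 (rounded)

    // Advances every body by dt seconds and returns the number of pairwise
    // force evaluations performed. Assumes no two bodies share a position.
    public static long Step(Body[] bodies, double dt)
    {
        long evaluations = 0;
        var ax = new double[bodies.Length];
        var ay = new double[bodies.Length];

        for (int i = 0; i < bodies.Length; i++)          // outer loop: the body being pulled
        {
            for (int j = 0; j < bodies.Length; j++)      // inner loop: every body pulling on it
            {
                if (i == j) continue;                     // no self-attraction
                double dx = bodies[j].X - bodies[i].X;
                double dy = bodies[j].Y - bodies[i].Y;
                double d2 = dx * dx + dy * dy;
                double d = Math.Sqrt(d2);
                double a = G * bodies[j].Mass / d2;       // F / m_i = G * m_j / d^2
                ax[i] += a * dx / d;                      // component pointing toward body j
                ay[i] += a * dy / d;
                evaluations++;
            }
        }

        // Semi-implicit Euler: update velocities first, then positions,
        // approximating the motion that happens between calculation moments.
        for (int i = 0; i < bodies.Length; i++)
        {
            bodies[i].Vx += ax[i] * dt;
            bodies[i].Vy += ay[i] * dt;
            bodies[i].X += bodies[i].Vx * dt;
            bodies[i].Y += bodies[i].Vy * dt;
        }
        return evaluations;
    }
}
```

With two 1,000 kg bodies placed 1 m apart and at rest, one step of 1 s moves each toward the other by about 6.7 × 10⁻⁸ m. Three bodies need 6 force evaluations per step; a million bodies would need about 10¹².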

Does Nature Need to Compute to Handle the Electromagnetic Force?

The electromagnetic force is the force of attraction and repulsion between charged particles such as protons and electrons. Like gravitation, the electromagnetic force is an inverse-square law with infinite range. The basic formula for the electromagnetic force is Coulomb's law, which looks very similar to the basic formula for gravitation, except that it uses charges rather than masses, and a different constant. The formula is shown below:

$$F = k\,\frac{q_a q_b}{r^2}$$

In this formula, $F$ is the electromagnetic force of attraction or repulsion, $k$ is a constant, $q_a$ is the first charge, $q_b$ is the second charge, and $r$ is the distance between them.
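As a worked instance of Coulomb's law, here is a small C# sketch that evaluates it for a proton and an electron separated by the Bohr radius (the characteristic size of a hydrogen atom). The constants are CODATA 2018 values; the Coulomb class and Force method are hypothetical names. Under the conventional signs (proton +e, electron −e), a negative result means attraction.

```csharp
using System;

public static class Coulomb
{
    public const double K = 8.9875517923e9;                 // Coulomb constant k, N m^2 C^-2
    public const double ElementaryCharge = 1.602176634e-19;  // e, in coulombs
    public const double BohrRadius = 5.29177210903e-11;      // a0, in metres

    // F = k * qa * qb / r^2. With the usual sign convention, a positive value
    // means the charges repel and a negative value means they attract.
    public static double Force(double qa, double qb, double r) => K * qa * qb / (r * r);
}
```

Calling Coulomb.Force(Coulomb.ElementaryCharge, -Coulomb.ElementaryCharge, Coulomb.BohrRadius) gives an attraction of about 8.24 × 10⁻⁸ newtons, which is the atomic unit of force. This is one pair of particles; the full calculation the text describes repeats it for every pair.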

The computational situation in regard to the electromagnetic force is very similar to the computational situation in regard to gravitation. Just as the formula for gravitation gives you only the tiniest fraction of the story (because it only tells you how to calculate the gravitational attraction between two particles), Coulomb's law gives you only the tiniest fraction of the story (because it only tells you how to calculate the electromagnetic force between two particles). Since the range of electromagnetism is infinite, fully computing the electromagnetic forces on any one particle or object requires that you take into account all other charged particles in the universe. If you were to write C# code that performs the calculations needed to compute the electromagnetic forces acting on all particles in the universe, it would need an insanely expensive nested loop like the one previously described for gravitation. The code would be something like this:

    foreach (Particle oParticleX in Universe)
    {
        foreach (Particle oParticleY in Universe)
        {
            if (oParticleY == oParticleX) continue;   // skip self-interaction

            // Coulomb's law: F = k * qa * qb / r^2 (positive = repulsion)
            double dDistance = ComputeDistanceBetweenParticles(oParticleX, oParticleY);
            double dForce = CoulombConstant * oParticleX.Charge * oParticleY.Charge
                            / (dDistance * dDistance);

            ApplyForce(dForce, oParticleX, oParticleY, ForceType.Electromagnetic);
        }
    }

In this case the outer loop must be traversed about $10^{80}$ times (a one followed by eighty zeros). But each such iteration requires running the inner loop, which must also be run about $10^{80}$ times. So the total number of iterations that must be run is about $10^{160}$, which is $10^{80}$ times greater than the total number of particles in the universe.

It seems, then, that nature's computational demands required by the electromagnetic force are incredibly high.
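The iteration counts above can be checked exactly with System.Numerics.BigInteger, since $10^{160}$ is far beyond the range of any built-in integer type. This sketch takes the text's figure of about $10^{80}$ particles as given; the IterationCount class and its methods are hypothetical names, and the DistinctPairs helper shows that visiting each unordered pair only once would roughly halve the count without changing its order of magnitude.

```csharp
using System;
using System.Numerics;

public static class IterationCount
{
    // Total inner-loop iterations for a nested loop over n particles: n * n.
    public static BigInteger NestedLoopIterations(BigInteger n) => n * n;

    // Distinct unordered pairs, n(n - 1) / 2, if each pair were visited only once.
    public static BigInteger DistinctPairs(BigInteger n) => n * (n - 1) / 2;
}
```

For n = BigInteger.Pow(10, 80), NestedLoopIterations returns exactly BigInteger.Pow(10, 160), and dividing that result by n gives n back, which is the "10^80 times greater" figure in the text.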

I may note that there is a long-standing tradition of representing the charge of the electron as negative and the charge of the proton as positive. But because the total number of attractions involving electrons is apparently equal to the total number of repulsions involving electrons, as far as we can see (something that is also true for protons), there is no physical basis for this convention. When we get rid of this “cheat” that arose for the sake of mathematical convenience, we see that nature seems to use an algorithmic, rule-based logic in electromagnetism, reminiscent of “if/then” logic in a computer program; and this logic differentiates between protons, neutrons and electrons. I will explain this point more fully in a future blog post.

According to quantum mechanics, every particle has what is called a wave function. The wave function determines the likelihood of finding the particle at a particular location. You can get a crude, rough analogy of the wave function if you imagine a programming function that takes a few inputs and produces as its output a scatter plot showing a region of space, with little dots, each representing the chance of the particle being in a particular place. The more dots there are in a particular region of space, the higher the likelihood of the particle existing in that region. (The wave function is actually a lot more complicated than this, but such an analogy will serve as a rough sketch.)
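The scatter-plot analogy can be sketched for the simplest textbook case: a particle in one dimension whose probability density $|\psi(x)|^2$ is a Gaussian centred at $x_0$ with width $\sigma$. The C# sketch below is a toy, not a solver for any real system, and the GaussianPacket class and its methods are hypothetical names. It evaluates that density and draws “dots” from it with the Box-Muller method; note that the density is small but never exactly zero far from the centre.

```csharp
using System;

public static class GaussianPacket
{
    // Probability density |psi(x)|^2 for a Gaussian packet centred at x0 with width sigma.
    public static double Density(double x, double x0, double sigma) =>
        Math.Exp(-(x - x0) * (x - x0) / (2.0 * sigma * sigma)) / (sigma * Math.Sqrt(2.0 * Math.PI));

    // Draws 'count' sample positions ("dots") distributed according to Density.
    public static double[] SampleDots(int count, double x0, double sigma, int seed)
    {
        var rng = new Random(seed);
        var dots = new double[count];
        for (int i = 0; i < count; i++)
        {
            // Box-Muller transform: two uniform numbers -> one standard normal number.
            double u1 = 1.0 - rng.NextDouble();   // in (0, 1], so Math.Log(u1) is finite
            double u2 = rng.NextDouble();
            double z = Math.Sqrt(-2.0 * Math.Log(u1)) * Math.Cos(2.0 * Math.PI * u2);
            dots[i] = x0 + sigma * z;
        }
        return dots;
    }
}
```

About 68% of the dots land within one width of the centre, where they cluster most densely, while Density(10.0, 0.0, 1.0) is roughly 10⁻²² but still greater than zero.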

But to do the full, complete calculation of the wave function, this “scatter plot” must be basically the size of the universe. According to quantum mechanics, the wave function is actually “spread out” across the entire universe. This means that while the wave function is computing a very high likelihood that a particular electron now exists at a location close to where it was an instant ago, it is also computing a very small, almost infinitesimal likelihood that the same electron may next be somewhere else in the universe, perhaps far, far away. Since the Pauli Exclusion Principle says that two identical fermions (such as two electrons) cannot occupy the same quantum state, this wave function calculation must apparently take into consideration all the other particles in the universe.

Here is a quote from Caltech physicist Sean Carroll:

In quantum mechanics, the wave function for a particle will generically be spread out all over the universe, not confined to a small region. In practice, the overwhelming majority of the wave function might be localized to one particular place, but in principle there’s a very tiny bit of it at almost every point in space. (At some points it might be precisely zero, but those will be relatively rare.) Consequently, when I change the electric field anywhere in the universe, in principle the wave function of every electron changes just a little bit.

Again, we have a case where the computation requirements of nature seem to be insanely high. Every second, nature seems to be computing the wave function of every particle in the universe, and the complete, full computation of that wave function requires an incredibly burdensome calculation that has to take all other particles in the universe into consideration.

I may also note that the very concept of the wave function (a function that takes inputs and produces an output) is extremely redolent of software and computation. Computer programs are built from functions that take inputs and produce outputs.

The case of the empty space between stars may seem at first to require absolutely zero computation by nature. After all, empty space between stars is just completely simple nothingness, right? Not quite. Quantum mechanics says that there is a vacuum energy density, and that the empty space between stars is a complex sea of virtual particles that last only for a fraction of a second, popping in and out of existence.

The mathematics required to compute this vacuum energy density is extremely complicated. Strangely enough, when physicists tried to calculate the vacuum energy density, they found at first that it seemed to require an infinite amount of calculation. So they resorted to a kind of trick or cheat called renormalization, which let them reduce the needed computation to a finite amount. (Richard Feynman, one of the pioneers of renormalization, once admitted that it is “hocus pocus.”)

Even after this cheat, the required calculation is still ridiculously laborious, as it requires calculating contributions from many different particles, fields, and forces. The calculation required to compute the energy density of one cubic centimeter is very complicated and computationally expensive. That amount must then be multiplied by more than a billion trillion quadrillion if we are to estimate nature's total computation requirements for calculating the vacuum energy density of all the cubic centimeters in all the empty spaces of our vast universe. So again we find a case where the computation requirements of nature seem to be very, very high.

A quantum jump is one of the strangest things in quantum mechanics. A quantum jump occurs when an electron jumps from one orbit in an atom to another (or, more strictly, from one quantum state to another). A quantum jump is typically triggered when an energetic photon strikes the electron. The following very crude diagram illustrates the idea. It shows an electron being struck by a photon of energy, with the electron jumping to a new orbital position. (I am speaking a bit schematically here.)

A quantum jump

However, the actual jump does not occur as a journey from one orbit to another, as shown in this crude visual. The jump occurs as an instantaneous transition from one orbit to another (or, more precisely, from one quantum state to another). The opposite of the process depicted above also frequently happens: an electron jumps to an orbit closer to the nucleus, causing a photon to be emitted.

Now, in physics there is a very important law saying that no two electrons in an atom can have the same quantum state. This law is known as the Pauli Exclusion Principle. What this roughly means is that no two electrons with the same spin can have the same orbital state.

Imagine an atom with many electrons in many different orbits. In such a case a photon may strike an electron, causing it to jump to a new orbital position. But if the atom already has many electrons, the jump must occur in a way that obeys the Pauli Exclusion Principle. Depending on the energy of the photon, the electron might have to jump over numerous different orbits, finding a slot to jump to that is compatible with the Pauli Exclusion Principle.

A complex atom

For example, a photon might hit an electron in one of the inner orbits of an atom like the one depicted above, causing it to jump to one of the outer orbits (the distance would depend on how energetic the photon was).

But in this case the electron does not “try” various orbital positions, ending up in the first one that is compatible with the Pauli Exclusion Principle. Instead the electron instantaneously jumps to the first available orbital position (consistent with the photon energy) that satisfies the Pauli Exclusion Principle.
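The bookkeeping described above can be sketched as a toy model in C#. Each electron state is labelled by the quantum numbers (n, l, mₗ, mₛ); the Pauli Exclusion Principle becomes the rule that no label may be occupied twice, and the jump becomes a search for the first unoccupied state at or above some minimum shell. Assumptions, all hypothetical: states are ordered simply by n, then l, then mₗ and spin (real multi-electron energy ordering is more complicated), the photon's energy is represented only by that minimum shell number, spin ±1/2 is stored as ±1, and the QState and PauliToy names are invented for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// One quantum state: principal n, orbital l, magnetic ml, spin ms (+1 or -1 for +1/2 or -1/2).
public readonly record struct QState(int N, int L, int Ml, int Ms);

public static class PauliToy
{
    // All states in shell n: l = 0..n-1, ml = -l..l, two spin values each.
    public static IEnumerable<QState> ShellStates(int n) =>
        from l in Enumerable.Range(0, n)
        from ml in Enumerable.Range(-l, 2 * l + 1)
        from ms in new[] { +1, -1 }
        select new QState(n, l, ml, ms);

    // First unoccupied state with minShell <= N <= maxShell, or null if every slot is taken.
    // The HashSet lookup is the Pauli check: an occupied label can never be reused.
    public static QState? JumpTarget(HashSet<QState> occupied, int minShell, int maxShell) =>
        Enumerable.Range(minShell, maxShell - minShell + 1)
                  .SelectMany(n => ShellStates(n))
                  .Select(s => (QState?)s)
                  .FirstOrDefault(s => !occupied.Contains(s.Value));
}
```

Enumerating the shells reproduces the familiar capacities 2, 8 and 18 for n = 1, 2 and 3. If the first two shells are full (as in neon), JumpTarget(occupied, 2, 4) skips the filled n = 2 slots and returns the first n = 3 state.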

Now the question is: how does the electron know exactly the right position to jump to instantaneously in this kind of complicated situation? This is basically the same question that was asked by Rutherford, one of the great atomic physicists.

This seems to be a case where nature has to do computation, both to recall and apply the complicated law of the Pauli Exclusion Principle and to compute the correct position (consistent with the Pauli Exclusion Principle) for the electron to relocate to. The whole operation seems rule-based and programmatic, and quantum jumps resemble the “variable assignment” operations that occur within programming code (in which a variable instantaneously has its value changed).

Looking for further cases where nature seems to perform like software, we might look at the famous double-slit experiment (in which electrons behave in a way that may suggest they are being influenced by some mysterious semi-cognizant rule). But let us instead look at an even more dramatic example: the phenomenon of quantum entanglement.

An example of quantum entanglement is shown in the illustration here. Particle C is a particle that decays into two daughter particles, A and B. Until someone measures the spin of either of these two particles, the spin of each daughter particle is indeterminate, which in quantum mechanics is a kind of fuzzy “not assigned yet” state (it might also be conceived as a combination of both possible spin states, Up and Down). It's rather like the idea one sees in a probability cloud diagram of an atom showing an electron orbital, where rather than saying that the electron has an exact position, we say that the electron's position is “spread out” throughout the probability cloud. Now, as soon as we measure the spin of either particle A or particle B, the spin becomes actualized or determined (one might say assigned, speaking as a programmer), and the other daughter particle then has its previously indeterminate spin actualized to a value opposite to the spin of the first particle. This effect has been found not to be limited by the speed of light, and it seems to occur instantaneously.

Example of Quantum Entanglement

Does this effect seem to require computation? Indeed, it does. The phenomenon of quantum entanglement seems to require that nature have some database or data engine that links together each pair of entangled particles, so that nature can keep track of which particle or particles are associated with any particular entangled particle (in much the same way that external databases keep track of which persons are your siblings or co-workers). Since physicists believe that quantum entanglement is not some rare phenomenon but is instead occurring to a huge extent all over the universe, the total amount of computation that must be done seems to be immense.

This type of quantum entanglement effect bears no resemblance to anything we see in the macroscopic world, but something like this effect can easily be set up within a relational database. Using the SQL language, I can easily set up a database in which particular objects are inversely correlated. The database might be created with SQL statements something like this:

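    -- Each particle, with its current spin (+1 or -1).
    CREATE TABLE Particles
    (
        ID1           int PRIMARY KEY,
        spin          int,
        mass          decimal(18, 6),
        particle_type char(10)
    );
    GO

    -- Each row links particle ID1 to its correlated partner ID2.
    CREATE TABLE Correlated_Particles
    (
        ID1 int,
        ID2 int
    );
    GO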

Now, given such an arrangement of data, I could establish the inverse correlation simply by writing what is called an update trigger on the Particles table, which is a piece of code that runs whenever a row in that table is updated. This update trigger could have a few lines of code that check whether an updated row in the Particles table has a match in the Correlated_Particles table. If such a match is found, the partner row in the Particles table is updated to achieve the inverse correlation. The code would look something like this (in the Transact-SQL dialect used by Microsoft SQL Server):

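    CREATE TRIGGER Inverse_Correlation
    ON Particles
    AFTER UPDATE
    AS
    BEGIN
        SET NOCOUNT ON;

        IF UPDATE(spin)
        BEGIN
            -- "inserted" holds the new values of the rows just updated.
            -- Give each updated particle's partner the opposite spin.
            -- (With the default RECURSIVE_TRIGGERS = OFF setting, this
            -- update does not fire the trigger again.)
            UPDATE p
            SET p.spin = -i.spin
            FROM Particles AS p
            JOIN Correlated_Particles AS c ON c.ID2 = p.ID1
            JOIN inserted AS i ON i.ID1 = c.ID1;
        END
    END;
    GO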

So we can achieve this strange effect of instantaneous inverse correlation, similar to quantum entanglement; but we need a database and we need some software (the code in the update trigger). To account for quantum entanglement in nature, we apparently need to postulate that nature has something like a data engine and some kind of software.

Upon hearing these arguments, many will take a position along these lines: “Well, I guess nature does seem to be doing a great deal of computation, or something like computation. But we should not then conclude that the universe has any software, or any computing engine, or any data engine.” Such thinking runs against the observational principle that virtually all computation seems to require some kind of software, computation engine, and data engine (as suggested by the graphic below, which compares common elements of two very different types of computation: a paper-and-pencil math calculation and an online Amazon order).

Elements Required for Complex Computation

I will give a name to the type of thinking described in the previous paragraph. I will call it the Magical Infinite Free Computation Assumption, or MIFCA. I call the assumption “magical” because all known computation involves some kind of software (or something like software) or some kind of computation engine or some type of data engine. To imagine that the universe does this nearly infinite amount of computation every second without any software, without any computing engine and without any data engine is to magically imagine that nature is getting “for free” something that normally has a cost (a cost in terms of the necessity of having a certain amount of software, a computation engine, and a data engine).

We can compare this type of MIFCA thinking to the thinking of a small child in regard to his parents' spending. A very young child may see his wealthy parents spending heavily all the time, and may generalize a rule that “My parents can buy whatever they want.” But such behavior actually has implications: it implies that one or both of the parents get a regular paycheck, or that the parents have a bank account that stores their savings. But the small child never thinks about such implications; he simply thinks, “My parents can buy whatever they want.” Similarly, the modern scientist may think that nature can do unlimited computation, but he fails to deduce the implications that follow: the fact that such computation implies the existence of associated software, a computation engine and a data engine.

Although this type of MIFCA assumption may be extremely common, it does not make sense. To imagine that the universe does a nearly infinite amount of rule-based computation every second without any software, without any computation engine and without any data engine is rather like imagining that somewhere a baseball game is being continuously played without any playing field, without any baseball, without any bases and without any baseball players.

These examples (and many others I could give) suggest that nature does require a high degree of computation for the phenomena studied by physicists. We are led then, dramatically, to a new paradigm. In this paradigm we must assume that software is a fundamental aspect of the universe, and that in all probability the universe has some type of extremely elaborate programming, computing engine and data engine that are mostly unknown to us. (By using the term “engine” I simply mean some type of system, not necessarily a material one.)

I will call this theory the theory of a programmed material universe. I choose this name to distinguish such a theory from other theories that claim that the universe is just a Matrix-style illusion or that the universe is a simulation (perhaps one produced by alien programmers). I reject such theories, and have argued against them in my blog post Why You Are Not Living in a Computer Simulation. The theory of a programmed material universe assumes that our universe is as real as we have always thought, but that a significant element of it is a computation layer that includes software. (I should note that the word “material” in the phrase “programmed material universe” is merely intended to mean “as real as anything else,” and does not necessarily imply any assumption about the nature of physical matter.)

The fact that we do not understand the details of such a cosmic computation layer should not stop us from assuming that it exists. Scientists assume that dark matter exists purely because they think it is needed to explain other things, not because there is any unambiguous direct observational evidence for dark matter. If there are sound reasons for assuming the universe must have software and a computation engine and a data engine, then we should assume that it does, regardless of whether we know the details of such things.

An assumption that software is a key part of the universe also does not force us to adopt the weighty assumption that the universe is self-conscious. The software we are familiar with has a certain degree of intelligence or smarts, but it is not self-conscious. I can write a program in two hours that has some small degree of intelligence or smarts, but the program is no more self-conscious than a rock. So we can believe that the universe has a certain degree of intelligence or smarts in its software, without having to believe that the universe is self-conscious.

An assumption that software is a key part of the universe also does not force us to the simplistic conclusion that “the universe is a computer.” A more appropriate statement would be along these lines: the universe, like a Boeing 747, is a complicated system going towards a destination; and in both cases software and computation are key parts of the overall system. It would be inaccurate to say that a Boeing 747 is a computer, but it would be correct to say that software and a computing system are crucial parts of a Boeing 747 (used for navigation and whenever the plane flies on autopilot). Similarly, we can say that software and computation are crucial parts of the universe (but we should not say “the universe is a computer”).

One way to visualize this theory of a programmed material universe is to imagine the universe as consisting of at least two layers – a mass-energy layer and a computation layer, as schematically depicted below. We should suppose the computation layer is vastly more complicated than the simple flowchart shown in this visual. We should also suppose that the two layers are intertwined, rather than one layer floating above the other layer. If you ask "Which layer am I living in?" the answer is: both.

One Way to Visualize the Theory

The argument presented here (involving the computational requirements of nature) is only half of the case for the theory of a programmed material universe. The other half of the case (which I will explain in my next blog post) is the explanatory need for such a theory in giving a plausible narrative of the improbable events in the history of the universe. The universe has undergone an astounding evolution from a singularity of infinite density at the time of the Big Bang, achieving improbable and fortunate milestones such as the origin of galaxies, the formation of planets with heavy elements, the origin of life, and the origin of self-conscious Mind. Such an amazing progression is very hard to credibly explain outside of a theory of a programmed material universe, but such a progression is exactly what we would expect if such a theory is true (partially because software can be goal-oriented and goal-seeking). Read my next blog post for a full explanation of this point.
