Claims that neuroscientists make about brains, minds and memory are mainly based on experiments with animals. This is largely because of the moral restrictions against doing brain experiments on human subjects. With a rat you can open up its brain and scan some of its cells to look for signs of brain changes caused by learning, or you can remove part of the rat's brain to see whether that affects learning. But you can't do those kinds of experiments with a human without engaging in behavior that would be widely condemned.

You do not need a survey of animal cognition researchers to know that the field of animal cognition research is in a sick state. You can learn that by critically analyzing the papers published by such researchers. You will find a very high prevalence of Questionable Research Practices and other serious problems. Among the problems are these:

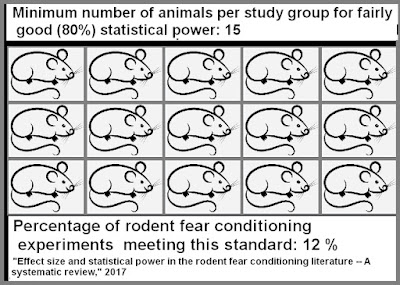

(1) Researchers routinely do experiments with insufficient sample sizes, very often using study groups smaller than 15 subjects. Fifteen subjects per study group is the minimum needed for a moderately reliable result, and any study using fewer than 15 subjects in any of its study groups will have a very high chance of producing a false alarm.

(2) Researchers routinely fail to show evidence that their experiments followed a blinding protocol designed to reduce experimenter bias, the tendency of a researcher to "see whatever he wants to see." Usually no blinding procedure is mentioned at all, and the words "blind" and "blinding" do not even appear in the paper describing the research. In the minority of cases in which blinding is mentioned, the mention is usually a half-hearted reference to some fragmentary effort at a blinding protocol. Almost never do we read a discussion of how a detailed plan was made to implement a thorough blinding protocol, and of how that plan was followed.

(3) The overwhelming majority of animal cognition research papers fail to show any evidence that a detailed hypothesis was chosen before an experiment began, and that the paper reported on whether that pre-selected hypothesis succeeded or failed. The overwhelming majority of such papers are not pre-registered papers in which the authors chose (before gathering data) a hypothesis to be tested, how data would be gathered, and how data would be analyzed. Instead, the great majority of papers give the impression of using "fishing expedition" techniques: data is gathered, and the researchers are then free to "slice and dice" it in innumerable ways, trying different data analysis methods until what could be called marginal evidence turns up for some hypothesis the researchers dreamed up after gathering the data.

(4) In some cases bad experimental methods have become a tradition. For example, the reigning tradition among animal cognition researchers is to try to measure fear in rodents by estimating "freezing behavior." Judging fear this way is subjective, and far less reliable than measuring heart rate, which undergoes a very sharp spike when rodents are afraid.

(5) Researchers very often make claims in the titles or abstracts of their papers that are not justified by any research described in those papers. They sometimes confess to doing this. At a blog entitled "Survival Blog for Scientists" and subtitled "How to Become a Leading Scientist," a blog that tells us "contributors are scientists in various stages of their career," we find an explanation of why so many science papers have inaccurate titles:

"Scientists need citations for their papers....If the content of your paper is a dull, solid investigation and your title announces this heavy reading, it is clear you will not reach your citation target, as your department head will tell you in your evaluation interview. So to survive – and to impress editors and reviewers of high-impact journals, you will have to hype up your title. And embellish your abstract. And perhaps deliberately confuse the reader about the content."

The European Journal of Neuroscience published an editorial entitled "Getting published: how to write a successful neuroscience paper." The editorial emphasized that the title and abstract of a neuroscience paper need to be "enticing," and recommended the active voice, giving as an example a statement that neurons signal something about memory. We can only guess at how many neuroscience papers have been given misleading and inaccurate titles because neuroscientists are being advised to use "enticing" titles, and urged to use the active voice when referring to mindless and passive chemicals and cells.
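One standard way to make point (1) concrete is the positive predictive value calculation: when a study has low statistical power, a larger share of its "significant" results are false alarms. The Python sketch below is illustrative only. It uses a normal approximation to the power of a two-group comparison rather than an exact t-test, and the effect size (d = 0.5) and the 20% prior probability that a tested hypothesis is true are hypothetical assumptions, not figures from any study discussed here.

```python
from statistics import NormalDist

ALPHA = 0.05  # conventional significance threshold


def approx_power(n_per_group: int, effect_size: float, alpha: float = ALPHA) -> float:
    """Approximate power of a two-sided, two-group comparison.

    Normal approximation (not an exact t-test); ignores the negligible
    chance of a significant result in the wrong direction.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    noncentrality = effect_size * (n_per_group / 2) ** 0.5
    return NormalDist().cdf(noncentrality - z_crit)


def positive_predictive_value(power: float, prior: float, alpha: float = ALPHA) -> float:
    """Share of 'significant' results that reflect a real effect."""
    true_hits = power * prior          # real effects that get detected
    false_alarms = alpha * (1 - prior)  # null effects that pass p < alpha anyway
    return true_hits / (true_hits + false_alarms)


# Hypothetical assumptions for illustration only:
EFFECT_SIZE = 0.5  # a "medium" standardized effect (Cohen's d)
PRIOR = 0.2        # assumed chance that a tested hypothesis is true

for n in (8, 15, 30, 64):
    pw = approx_power(n, EFFECT_SIZE)
    ppv = positive_predictive_value(pw, PRIOR)
    print(f"n={n:>2} per group: power={pw:.2f}, "
          f"share of significant results that are false alarms={1 - ppv:.2f}")
```

Under these assumed numbers the false-alarm share shrinks as group size grows; the exact figures depend entirely on the assumed effect size and prior.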

A recently published paper gives us a different way of detecting the sick state of animal cognition research. Entitled "The hidden side of animal cognition research: Scientists’ attitudes toward bias, replicability and scientific practice," the paper reports a survey of scientists doing animal cognition research, which 210 researchers completed. Collectively, their answers are an indictment of the dysfunctional state of animal cognition research.

When asked about bias in their experiments, nearly 80% of researchers confessed that they found themselves often or sometimes hoping for some particular result in their study. When we have such a level of bias and a failure of most animal cognition research papers to follow a blinding protocol to reduce bias, we have basically a sure-fire recipe for unreliable results caused by unmitigated experimenter bias. When asked whether the results and theories in their area of animal cognition research are strongly affected by the biases of researchers, more of the experimenters agreed than disagreed. When asked whether the results and theories in other areas of animal cognition research are strongly affected by the biases of researchers, far more of the experimenters agreed than disagreed, with 46% agreeing, and only 15% disagreeing.

When asked about overstating claims in papers (making claims in a paper that are not justified by the research), 7.7% of researchers confessed to making stronger claims than warranted. Given that only a small fraction of people confess to their own wrongdoing, we should assume that the actual number of animal cognition researchers making stronger claims than warranted is many times higher than this 7.7%. Indeed, when asked about the practices of other researchers in animal cognition, 56% of the respondents said that other researchers made stronger claims than warranted.

When asked what percentage of their experimental studies have been or will be published, the average response was 80%. This suggests a serious problem: roughly a fifth of studies never get published, and studies producing null results are the ones likely to go unpublished. So, for example, if a researcher produces a result that does not support prevailing neuroscientist dogmas about brains storing memories, such a study will simply end up in a file drawer without being published.

Researchers in animal cognition confessed to rather low confidence in the statistical analysis in their papers. When asked whether they "somewhat agree" or "strongly agree" that the statistical analysis in their own papers is valid, 42.9% said that they merely "somewhat agree" rather than "strongly agree." Since this is a self-confession question, and since we would expect only a small fraction of the researchers who doubt their own statistical analysis to confess that doubt, we may assume that it is actually the great majority of animal cognition researchers who lack strong confidence in their statistical analysis. There are strong reasons for suspecting that extremely dubious statistical analysis is the rule rather than the exception in animal cognition research. One respondent stated that "The majority of animal cognition researchers have a very sparse statistical education," adding that this can be "a huge potential for errors."

Why is there so much arcane statistical analysis in neuroscience papers? It's because typically experimenters get results providing no good evidence for the incorrect dogmas they are trying to support. Then our researchers very often decide to keep playing around with statistical analysis until the data seems to provide some faint whisper sounding a bit like the desired effect. There's an old expression: if you torture the data sufficiently, it will confess to anything.
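The arithmetic behind "torturing the data" can be sketched in a few lines of Python. If there is no real effect and a researcher tries k independent analyses, each with a 5% false-positive rate, the chance that at least one of them comes out "significant" is 1 − (1 − 0.05)^k. The sketch below computes that figure and checks it with a small simulation that relies on the fact that, under a true null hypothesis, p-values from a continuous test are uniformly distributed. It assumes the analyses are independent; re-analyses of one dataset are usually correlated, so the real inflation typically falls between 5% and the figure shown here.

```python
import random

ALPHA = 0.05  # conventional significance threshold


def familywise_error_rate(num_analyses: int, alpha: float = ALPHA) -> float:
    """Chance that at least one of several independent null analyses hits p < alpha."""
    return 1 - (1 - alpha) ** num_analyses


def simulate_fishing(num_analyses: int, trials: int = 100_000,
                     alpha: float = ALPHA, seed: int = 1) -> float:
    """Monte Carlo check of familywise_error_rate.

    With no real effect, each p-value from a continuous test is uniform
    on [0, 1], so we draw p-values directly (assumes independent analyses).
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # One "experiment": try num_analyses analyses, stop at the first p < alpha.
        if any(rng.random() < alpha for _ in range(num_analyses)):
            hits += 1
    return hits / trials


for k in (1, 5, 10, 20):
    print(f"{k:>2} analyses: exact={familywise_error_rate(k):.3f}, "
          f"simulated={simulate_fishing(k):.3f}")
```

With one pre-registered analysis the false-alarm chance stays at the nominal 5%; each extra analysis tried after the fact pushes it upward.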

When asked how often Questionable Research Practices occur in their own research, 27% of animal cognition researchers confessed that such QRPs occur "sometimes," "often" or "always." Since this is a self-confession question, in which we would expect only a small fraction of the researchers engaging in Questionable Research Practices to confess to doing so, we should assume that the actual percentage of researchers engaging in Questionable Research Practices is far higher than 50% (an assumption very much warranted by reading the papers of such researchers). When asked how often Questionable Research Practices occur in the research of other animal cognition researchers, 52.7% of the respondents said that such practices occur "sometimes," and 28.9% said that they occur "often" or "always."

When asked about replication, 73% of the respondents agreed that some areas of animal cognition research would experience a replication crisis if most of their studies were subjected to replication attempts. This amounts to a confession of large-scale unreliability in animal cognition research.

How would things work if experimental science were being done properly? For one thing, there would be a central repository in which scientists could, without any restriction, (1) publish a research protocol for an upcoming experiment, amounting to a public promise to test one particular hypothesis and to gather and analyze data in a particular way, and (2) concisely report the results (null or not) of particular experiments, regardless of whether any paper on such a result got published. But the animal cognition survey paper states that no such repository even exists. We read this:

"While there is currently no central repository or systematic method for study registration (c.f. https://clinicaltrials.gov/ for medical trials), research groups could seek to publicly archive all studies they conduct, which would allow other researchers to assess the strength of evidence not just from individual studies, but in relation to the entire research programme they have come from."

So our science community does not even have one of the most basic tools it should have to do research in a competent way. How lame is that?

What the very illuminating "hidden side of animal cognition research" study reveals is that animal cognition research is in a sick and dysfunctional state. We should remember this every single time a scientist claims that memories are stored in brains and that brains produce minds. Such claims are mostly based on animal cognition research, a diseased and dysfunctional branch of research that is not a reliable pillar for anything. There is no robust evidence for any of the main claims of neuroscientists. We have no good evidence from either animals or humans that brains store memories or that brains are the source of human mental effects such as self-awareness, thinking, instantaneous memory recall, imagination, understanding and selfhood. To the contrary, low-level research on brains very frequently provides strong reasons for rejecting all such claims, revealing the brain and its synapses as too slow, unreliable, noisy and unstable to be the source of the human mind and its abilities.
