Let us take a very close look at some important laws of nature. When you go to the trouble of looking very closely at these laws, you may end up being stunned by their seemingly programmatic aspects, and you may end up getting some insight into just how apparently methodical and conceptual the laws of nature are.

The laws I refer to are some laws that are followed when subatomic particles collide at high speed. In recent years scientists at the Large Hadron Collider and other particle accelerators have been busy smashing together particles at very high speeds. The Large Hadron Collider is the world's largest particle accelerator, and consists of a huge underground ring some 17 miles in circumference.

The Large Hadron Collider accelerates protons (tiny subatomic particles) to near the speed of light. The scientists accelerate two globs of protons to a speed of more than 100,000 miles per second, one glob going in one direction in the huge ring, and another glob going in the other direction. The scientists then get some of these protons to smash into each other.

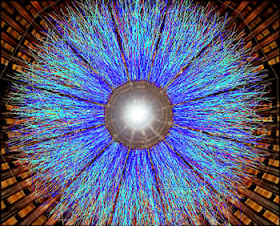

A

result of such a collision (from a site describing a different

particle accelerator) is depicted below. The caption of this image

stated: “A

collision of gold nuclei in the STAR experiment at RHIC creates a

fireball of pure energy from which thousands of new particles are

born.”

Such

a high-speed collision of protons or nuclei can produce more than 100

“daughter particles” that result from the collision. The daughter

particles are rather like the pieces of glass you might get if you

and your friend hurled two glass balls at each other, and the balls

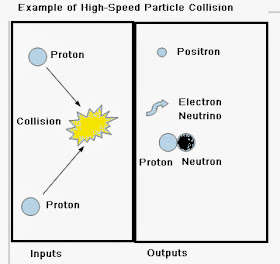

collided (please don't ever try this). Here is a more schematic

depiction of a one of the simplest particle collisions (others are

much more complicated):

The results of a collision like that shown in the first image may seem like a random mess, but nature actually follows quite a few laws when such collisions occur. The first law I will discuss is one that there is no name for, even though there should be. This is the law we might call the Law of the Five Allowed Stable Particles. This is simply the law that the stable long-lived output particles created from any very high-speed subatomic particle collision are always particles on the following short list:

| Particle | Rest Mass | Electric Charge |
| --- | --- | --- |
| Proton | 1.67262177×10⁻²⁷ kg | 1.602176565×10⁻¹⁹ Coulomb |
| Neutron | 1.674927351×10⁻²⁷ kg | 0 |
| Electron | 9.10938291×10⁻³¹ kg | -1.602176565×10⁻¹⁹ Coulomb |
| Photon | 0 | 0 |
| Neutrino | Many times smaller than electron mass | 0 |

I am not mentioning antiparticles on this list, because such particles are destroyed as soon as they come in contact with regular particles, so they end up having a lifetime of less than a few seconds.

This Law of the Five Allowed Stable Particles is not at all a trivial law, and raises the serious question: how is it that nature favors only these five particles? Why is it that high-speed subatomic particle collisions don't produce stable particles with thousands of different random masses and thousands of different random electric charges? It is as if nature has inherent within it the idea of a proton, the idea of an electron, the idea of a neutron, the idea of a photon, and the idea of a neutrino.

When particles collide at high speeds, nature also follows what are called conservation laws. Below is a table describing the conservation laws that are followed in high-speed subatomic particle collisions. Particles with positive charge are shown in blue; particles with negative charge are shown in red; and unstable particles are italicized (practically speaking, antiparticles are unstable because they quickly combine with regular particles and are converted to energy, so I'll count those as unstable particles). The particles listed before the → symbol are the inputs of the collision, and the particles after the → symbol are the outputs of the collision. The → symbol basically means “the collision creates this.”

| Law | Description | Example of particle collision or decay allowed under law | Example of particle collision or decay prohibited under law |
| --- | --- | --- | --- |
| law of the conservation of mass-energy | The mass-energy of the outputs of a particle collision cannot exceed the mass-energy of the inputs of the collision | proton + proton → proton + neutron + *positron* + electron neutrino | electron + electron → *antiproton* + electron (prohibited because an antiproton is almost a thousand times more massive than two electrons) |
| law of the conservation of charge | The net charge, meaning the number of proton-like charges (called “positive” and shown here in blue) minus the number of electron-like charges (called “negative” and shown here in red), in the outputs of a particle collision must be the same as the net charge was in the inputs of the collision | proton + proton → proton + neutron + *positron* + electron neutrino (two proton-like charges in input, two proton-like charges in output). At higher collision energies: proton + proton → proton + proton + proton + *antiproton* (net charge of +2 in input, net charge of +2 in output) | proton + proton → proton + neutron + electron + electron neutrino (two proton-like charges in input, but one proton-like charge and one electron-like charge in output, for a net charge of 0) |
| law of the conservation of baryon number | Using the term “total baryon number” to mean the total of the protons and neutrons (minus the total of the antiprotons and antineutrons), the total baryon number of the stable outputs of a particle collision must be the same as this total was in the inputs of the collision | proton + proton → proton + neutron + *positron* + electron neutrino (total baryon number of 2 in inputs, total baryon number of 2 in the outputs) | proton + neutron → proton + *muon* + *antimuon* (total baryon number of 2 in inputs, total baryon number of 1 in the outputs) |
| law of the conservation of lepton number (electron number “flavor,” there also being “flavors” of the law for muons and tau particles) | Considering electrons and electron neutrinos to have an electron number of 1, and considering positrons and anti-electron neutrinos to have an electron number of -1, the sum of the electron numbers in the outputs of a particle collision must be the same as this sum was in the inputs of the collision | neutron → proton + electron + *anti-electron neutrino* (total electron number of inputs is 0, net electron number of outputs is 0) | neutron → proton + electron (total electron number of inputs is 0, but net electron number of outputs is 1) |

Each of the examples given here of allowed particle collisions is only one of the many possible outcomes allowed under the laws above. When you have very high-energy particles colliding, many output particles can result (and nature's burden in following all these laws becomes higher).
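The bookkeeping described in the table can be sketched in a few lines of Python. This is only an illustrative sketch, not a physics simulation: the names `PARTICLES`, `LAWS`, `totals`, and `violated_laws` are made up for this example, the quantum numbers are the standard textbook values, and mass-energy is left out because it depends on the collision energy.

```python
# Hypothetical lookup table for illustration only.
# Each entry: (electric charge in units of the proton charge,
#              baryon number, electron number)
PARTICLES = {
    "proton": (1, 1, 0),
    "antiproton": (-1, -1, 0),
    "neutron": (0, 1, 0),
    "electron": (-1, 0, 1),
    "positron": (1, 0, -1),
    "electron neutrino": (0, 0, 1),
    "anti-electron neutrino": (0, 0, -1),
    "muon": (-1, 0, 0),
    "antimuon": (1, 0, 0),
}
LAWS = ("charge", "baryon number", "electron number")


def totals(particles):
    """Sum each conserved quantity over a list of particle names."""
    return tuple(sum(PARTICLES[p][i] for p in particles) for i in range(len(LAWS)))


def violated_laws(inputs, outputs):
    """Return the names of the laws whose totals differ between inputs and outputs."""
    before, after = totals(inputs), totals(outputs)
    return [law for law, b, a in zip(LAWS, before, after) if b != a]


# Allowed example from the table: every total matches, so nothing is violated.
print(violated_laws(
    ["proton", "proton"],
    ["proton", "neutron", "positron", "electron neutrino"],
))

# Prohibited example from the baryon number row.
print(violated_laws(
    ["proton", "neutron"],
    ["proton", "muon", "antimuon"],
))
```

Notice that each check only compares a sum taken before the collision with a sum taken after it. The number of output particles does not matter, so the same function works for an output list with four particles or with seventy.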

Now let us consider a very interesting question: does nature require something special to fulfill these laws, perhaps something like ideas or computation or figure-juggling or rule retrieval?

In the case of the first of these laws, the law of the conservation of mass-energy, it does not seem that nature has to have anything special to fulfill that law. The law basically amounts to just saying that substance can't be magically multiplied, or saying that mass-energy can't be created from nothing.
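The prohibited example given for this law (two electrons producing an antiproton) rests on a simple rest-mass comparison, which can be worked out as follows. This is a rough sketch that assumes the standard electron rest mass of about 9.109×10⁻³¹ kg, uses the fact that an antiproton has the same rest mass as a proton, and ignores any kinetic energy the electrons carry, which also counts toward their mass-energy.

```latex
\frac{m_{\bar{p}}}{2\,m_e}
  = \frac{1.67262177\times10^{-27}\ \mathrm{kg}}
         {2 \times 9.10938291\times10^{-31}\ \mathrm{kg}}
  \approx 918
```

So two slow-moving electrons fall short of the rest mass of a single antiproton by a factor of roughly 900.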

But in the case of the law of the conservation of charge, we have a very different situation. To fulfill this law, it would seem that nature requires “something extra.”

First, it must be stated that what is called the law of the conservation of charge has a very poor name, very apt to give you the wrong idea. It is not at all a law that prohibits creating additional electric charges. In fact, when two protons collide at very high speeds at the Large Hadron Collider, we can see more than 70 charged particles arise from a collision of only two charged particles (two protons). So it is very misleading to state the law of the conservation of charge as a law that charge cannot be created or destroyed. The law should be called the law of the conservation of net charge. The correct way to state the law is this: the number of proton-like charges (in other words, positive charges) minus the number of electron-like charges (in other words, negative charges) in the outputs of a particle collision must be the same as that difference was in the inputs of the collision.

This law, then, cannot work by a simple basis of “something can't be created out of nothing.” It requires something much more: apparently that nature have something like a concept of the net charge of the colliding particles, and also that it somehow be able to figure out a set of output particles that will have the same net charge. The difficulty of this trick becomes apparent when you consider that the same balancing act must be done when particles collide at very high speeds, in a collision where there might be more than 70 charged output particles.

I may also note that for nature to enforce the law of the conservation of charge (more properly called the law of the conservation of net charge), it would seem to be a requirement that nature somehow in some sense “know” or have the idea of an abstract concept: the very concept of the net charge of colliding particles. The “net charge” is something like “height/weight ratio” or “body mass index,” an abstract concept that does not directly correspond to a property of any one object. So we can wonder: how is it that blind nature could have a universal law related to such an abstraction?

In the case of the law of the conservation of baryon number, we also have a law that seems to require something extra from nature. It requires apparently that nature have some concept of the total baryon number of the colliding particles, and also that it somehow be able to figure out a set of output particles that will have the same total baryon number. Again we have a case where nature seems to know an abstract idea (the idea of total baryon number). But here the idea is even more abstract than in the previous case, as it involves the quite abstract notion of the total of the protons and neutrons (minus the total of the antiprotons and antineutrons). This idea is far beyond merely a physical property of some particular particle, so one might be rather aghast that nature seems to in some sense understand this idea and enforce a universal law centered around it.

The same type of comments can be made about the law of the conservation of lepton number. Here we have a law of nature centered around a concept that is even more abstract than the previous two concepts: the notion of electron number, which involves regarding one set of particle types (including both charged and neutral particles) as positive, and another set of particle types (including both charged and neutral particles) as negative. Here is a notion so abstract that a very small child could probably never even hold it in his or her mind, but somehow nature manages not only to hold the notion but also to enforce a law involving it whenever two particles collide at high speeds.

The examples of particle collisions given in the table above are simple, but when particles collide at very high speeds, the outputs are sometimes much more complicated. There can be more than 50 particles resulting from a high-speed proton collision at the Large Hadron Collider. In such a case nature has to instantaneously apply at least five laws, producing a solution set that has many different constraints.

For historical reasons, the nature of our current universe depends critically on the laws described above. Even though these types of high-speed relativistic particle collisions are rare on planet Earth (outside of particle accelerators used by scientists), these types of particle collisions take place constantly inside the sun. If the laws above were not followed, the sun would not be able to consistently produce radiation in the way needed for the evolution of life. In addition, in the time immediately after the Big Bang, the universe was one big particle collider, with all the particles smashing into each other at very high speeds. If the laws listed above hadn't been followed, we wouldn't have our type of orderly universe suitable for life.

By now I have described in some detail the behavior of nature when subatomic particles collide at high speeds. What words best describe such behavior? I could use the words “fixed” and “regular,” but those words don't go far enough in describing the behavior I have described.

The best words I can use to describe this behavior of nature when subatomic particles collide at very high speeds are these words: programmatic and conceptual.

The word programmatic is defined by the Merriam-Webster online dictionary in this way: “Of, relating to, resembling, or having a program.” This word is very appropriate to describe the behavior of nature that I have described. It is just as if nature had a program designed to ensure that the balance of positive and negative charges does not change, that the number of protons plus the number of neutrons does not change, and that overall lepton number does not change.

The word conceptual is defined by the Merriam-Webster online dictionary in this way: “Based on or relating to ideas or concepts.” This word is very appropriate to describe the behavior of nature that I have described. We see in high-speed subatomic particle collisions that nature acts with great uniformity to make sure that the final stable output particles are one of the five types of particles in the list above (protons, neutrons, photons, electrons, and neutrinos). It is just as if nature had a clear idea of each of these things: the idea of a proton, the idea of a neutron, the idea of a photon, the idea of an electron, and the idea of a neutrino. As nature has a law that conserves net charge, we must also assume that nature has something like the idea of net charge. As nature has a law that conserves baryon number, we must also assume that nature has something like the idea of baryon number. As nature has a law that conserves lepton number, we must also assume that nature has something like the idea of lepton number.

This does not necessarily imply that nature is conscious. Something can have ideas without being conscious. The US Constitution is not conscious, but it has the idea of the presidency and the idea of Congress.

So given very important and fundamental behavior in nature that is both highly conceptual and highly programmatic, what broader conclusion do we need to draw? It seems that we need to draw the conclusion that nature has programming. We are not forced to the conclusion that nature is conscious, because an unconscious software program is both conceptual and programmatic. But we do at least need to assume that nature has something like programming, something like software.

Once we make the leap to this concept, we have an idea that ends up being very seminal in many ways, leading to some exciting new thinking about our universe. Keep reading this blog to get a taste of some of this thinking.